Use an Animated GIF to drive story-telling

- 3 minutes read - 601 words

Table of Contents

In the ever-evolving world of artificial intelligence, multi-modal large language models (LLMs) have emerged as a game-changer, transforming how we create and analyze content. These AI systems can process and generate information across various formats, including text, images, audio, and video. By leveraging multi-modal LLMs like GPT4 and combining them with APIs, we can create automated workflows that extract scenes from videos, recreate movies, and perform advanced video analysis. In this blog post, we’ll delve into the fascinating capabilities of multi-modal LLMs and explore their potential applications in content creation and video analysis.

Video-to-Scene

In video-to-scene technology, a scene refers to a brief sequence of events or actions within a video, typically comprising several beats or moments. It is essential to understand that uploading an entire movie or lengthy video into an AI system for processing might not be practical or efficient.

When working with video-to-scene technology, it is recommended that you focus on specific scenes or segments of interest within a video. By doing so, the AI can analyze and convert the chosen scene into an interactive 3D representation, enabling users to explore and engage with the content more effectively and dynamically.

Selecting shorter scenes or sequences for video-to-scene processing offers several advantages. It reduces the computational resources and time required for processing. It ensures that the AI can concentrate on the video’s most relevant and meaningful parts, returning a proper scene description.

Step-by-Step video re-creation

- Acuire a Video Sample

- Go to giphy.com

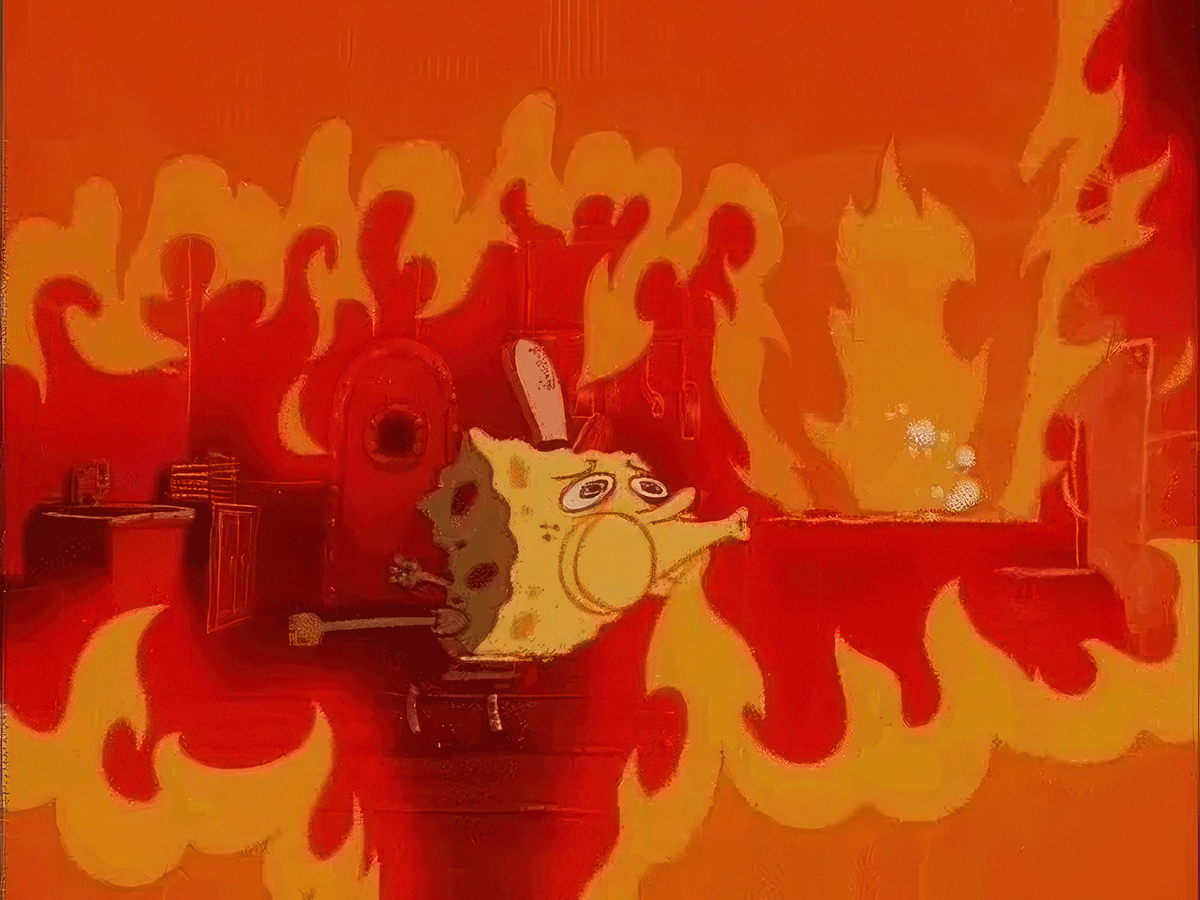

- Search for an animated GIF (example search term: chaos)

- Download AGIF

Ideally, the test video sample should be short and have a limited number of beats. The approach also works for animated GIFs.

- Get Scenes

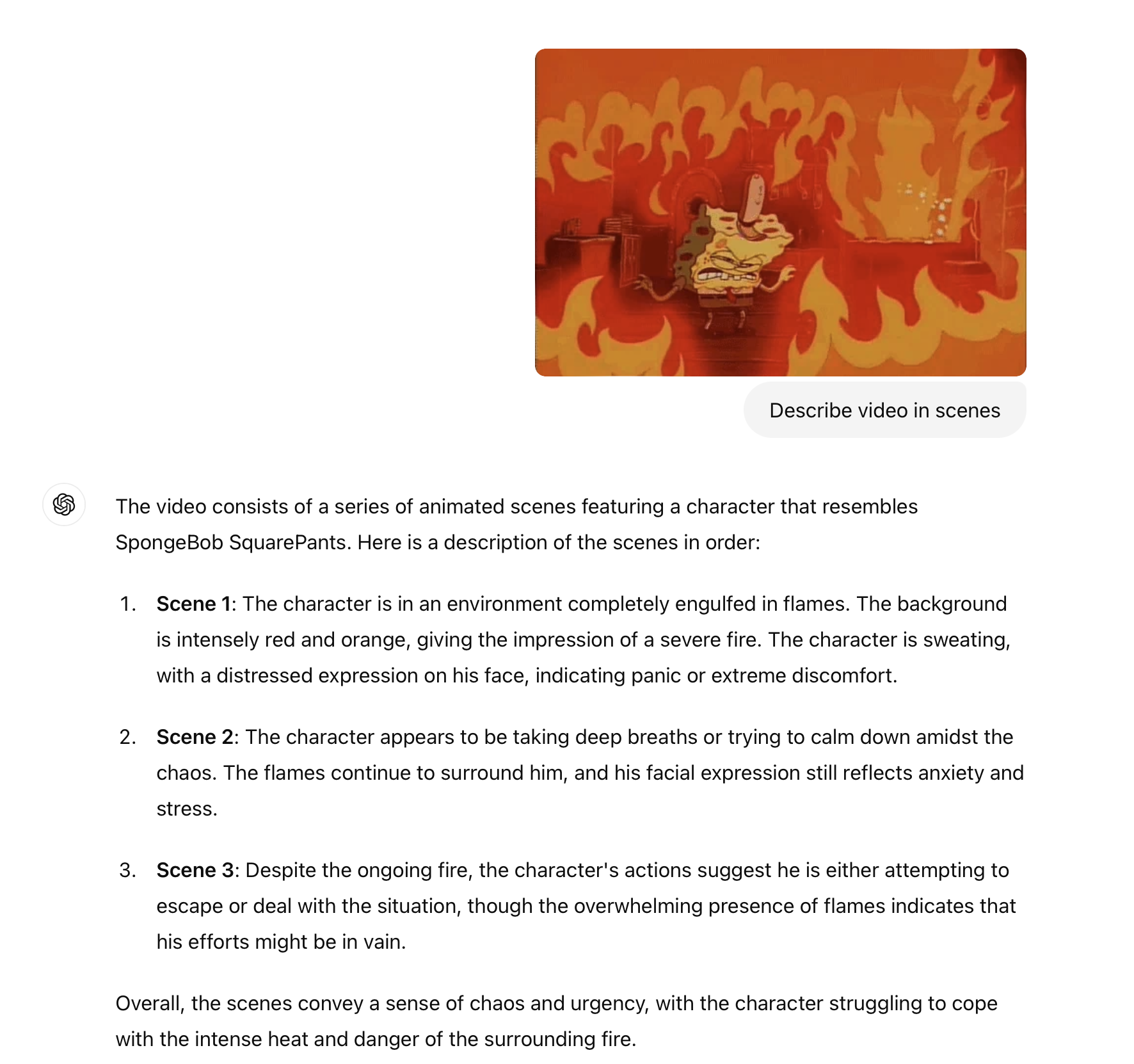

- Start a new GPT Chat

- Upload AGIF with „Describe videos in scenes“

- Turning the Descriptions into new images

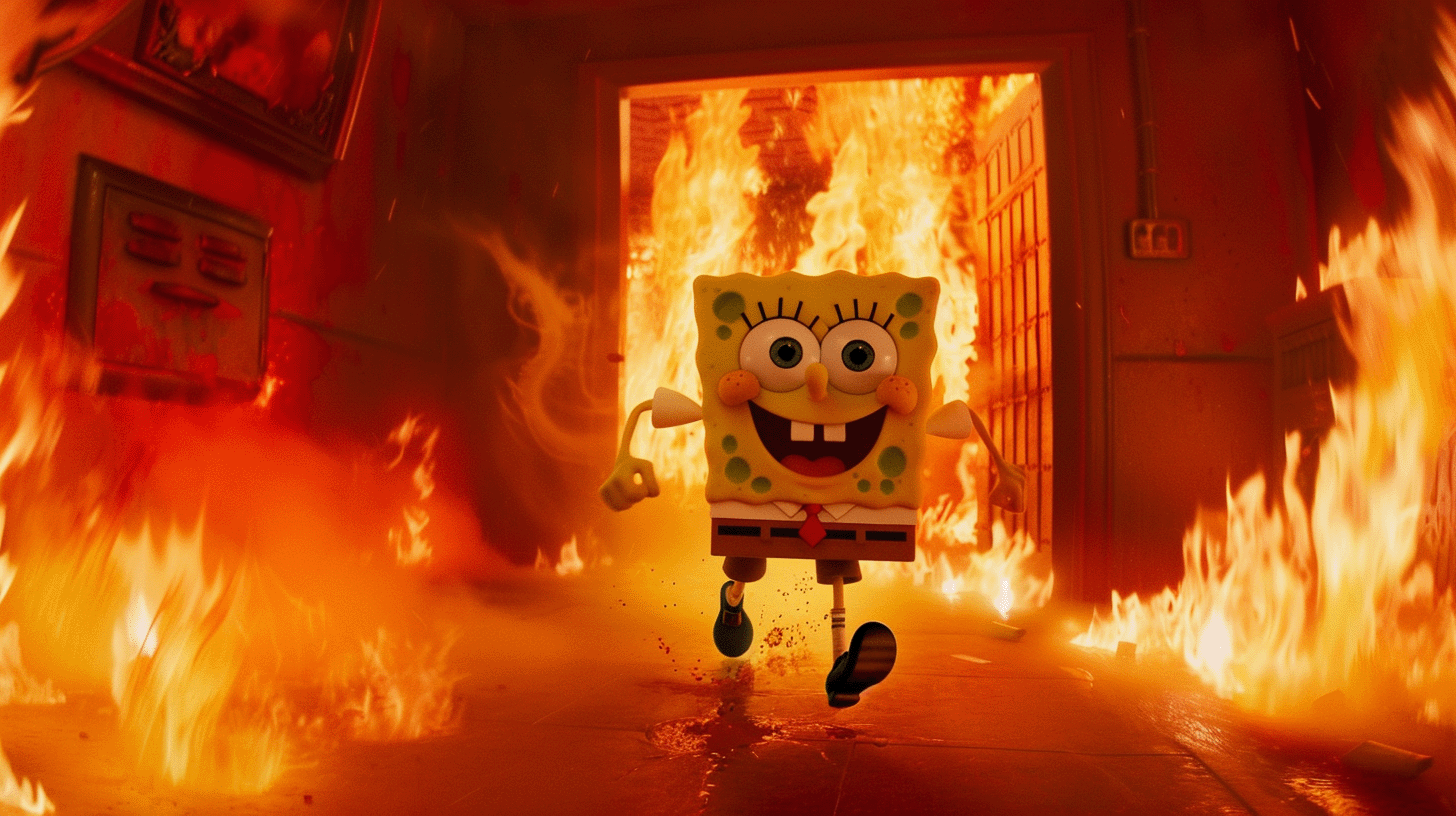

- Copy each description and use it as a text prompt (for example, with midjourney)

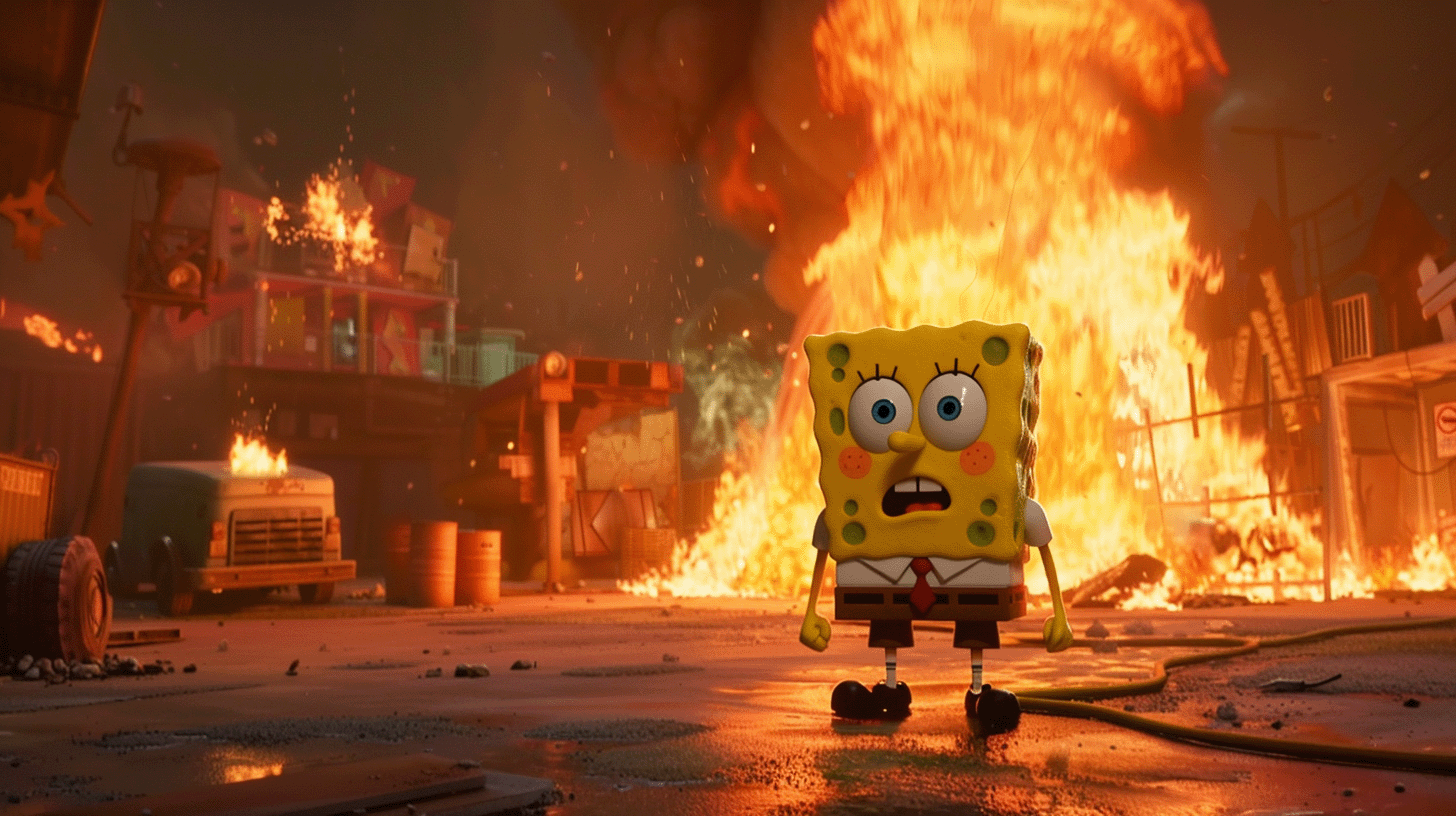

- Based on the example, there are three scenes.

- Turn every scene into a video clip

As a result, you will have a 12-second video generated from an animated GIF.

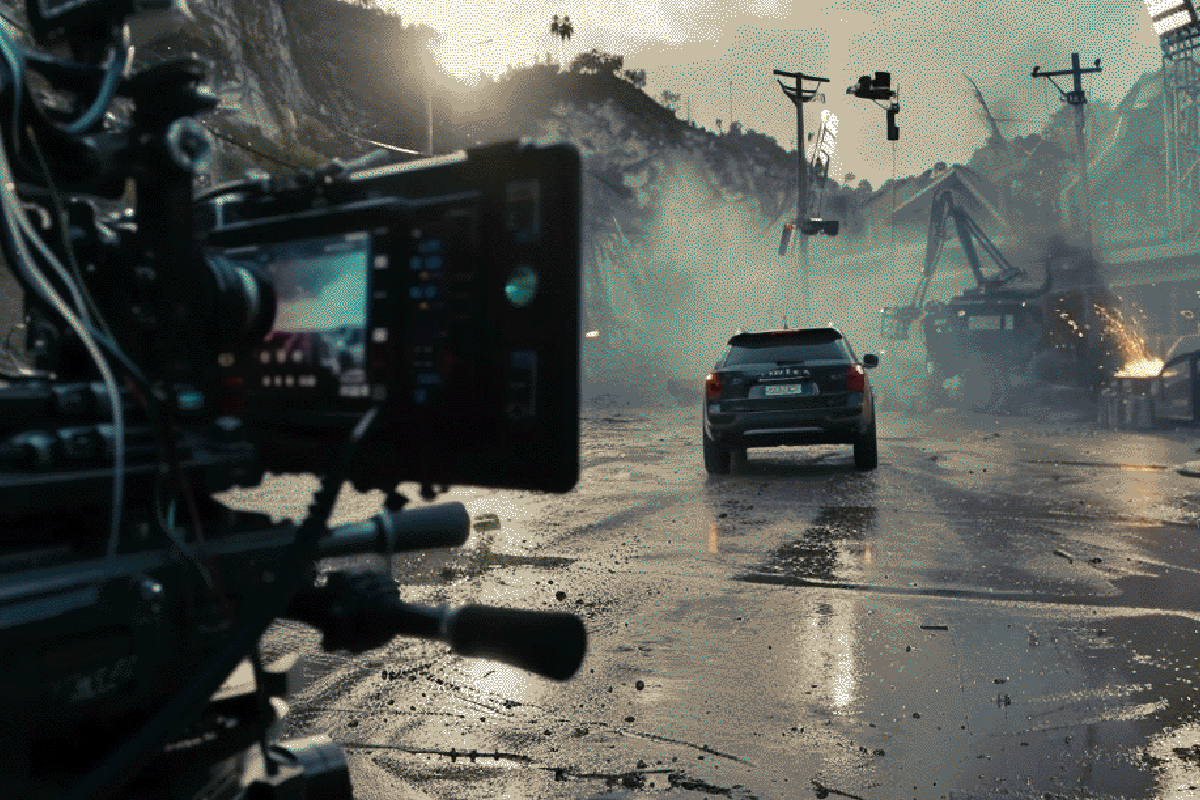

Another version of this video using Luma AI for the first shot.

Conclusion

By combining the multi-model capabilities of GPT4 or another LLM with an API, you can create an automated workflow to extract scenes and recreate the original movie.

The images will have a different style from the original animated GIF or video. However, it is possible to approximate the original style using style transfer or references.

The approach is also suitable for automated video analysis and classifications if the scene descriptions are combined with analytical NLP methods.

The video is derived from the three images, and the video clips tell a story shot-by-shot. It is not like the original video in style but by its story.

Sources:

- https://braintitan.medium.com/video-llava-better-understanding-and-processing-of-images-and-videos-c37e2c4fd69e

- https://www.linkedin.com/pulse/transforming-images-text-withpython-roman-orac-xpksf/

- https://replicate.com/yorickvp/llava-13b

- https://replicate.com/nateraw/video-llava

- https://community.openai.com/t/chatgpt-goes-multimodal-sound-and-vision-is-rolling-out-on-chatgpt/394501

- https://www.youtube.com/watch?v=IiyBo-qLDeM