Abstract Meaning Representation: Semantic Representation for Natural Language Understanding

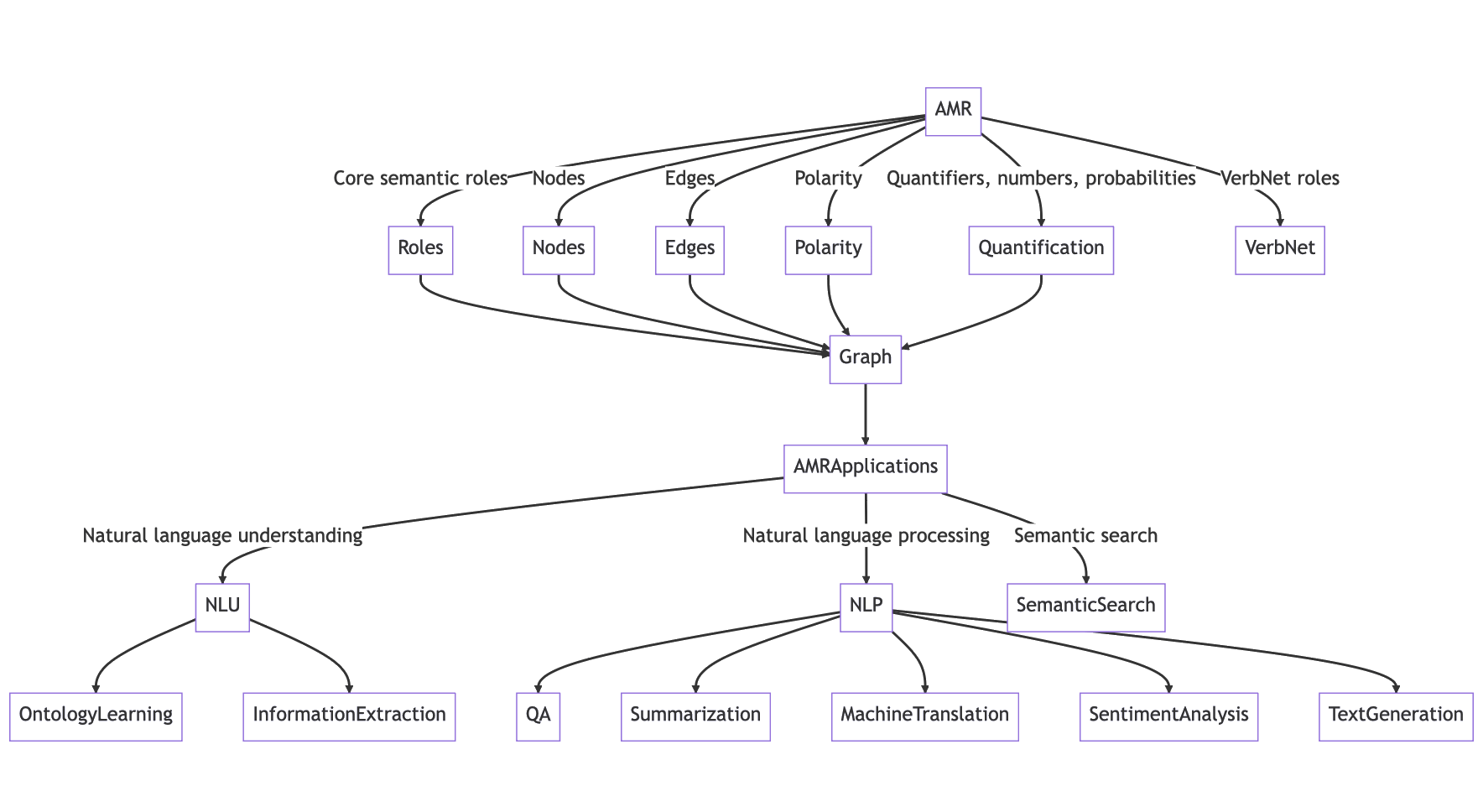

Abstract Meaning Representation (AMR) is a semantic representation of natural language that aims to capture the meaning of sentences, regardless of their form.

It represents sentences as rooted, directed graphs designed to enable natural language understanding for machines.

AMR parsing serves as an intermediate Natural Language Processing (NLP) task: it turns a sentence into a structured representation of its meaning that downstream applications can build on.

Key concepts in AMR

Several key concepts in AMR underpin its approach to semantic representation:

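- Core semantic roles: AMR labels the main participants of a predicate with numbered roles such as ARG0, ARG1, and ARG2. ARG0 typically marks the agent and ARG1 the patient or theme; the higher-numbered roles are predicate-specific (for example an instrument, a recipient, or an end point), so their meaning depends on the verb sense involved. These roles structure the relationships between concepts in a sentence.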

- Nodes: Nodes represent concepts (or word senses) and named entities in an AMR graph. They correspond to the central ideas or components of a sentence.

- Edges: Edges represent relations between concepts. They connect nodes in the graph and encode aspects of meaning, such as semantic roles and modifiers.

- Polarity: Polarity marks negation. In AMR, a concept carrying the attribute :polarity - is negated, which is how a sentence such as “John did not run” is represented.

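- Quantifiers, numbers, and likelihood: AMR represents quantities and numbers explicitly, for example through the :quant role, and expresses possibility or likelihood with modal concepts rather than numeric probabilities. This lets it capture some of the nuance and uncertainty in a sentence.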

- PropBank framesets: AMR takes its predicate senses and numbered roles from PropBank framesets (for example run-01) rather than from VerbNet classes. PropBank is a lexical resource that lists, for each verb sense, the numbered arguments that sense can take.
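To make the concepts above concrete, here is a minimal Python sketch that stores an AMR graph as (source, role, target) triples. The example sentence (“The boy does not want to go”), the frameset numbers, and all function names are assumptions chosen for illustration; they are not part of any AMR toolkit.

```python
from collections import Counter

# Triples for "The boy does not want to go".
# Frameset numbers (want-01, go-02) are illustrative assumptions.
# ":instance" links a variable (node) to the concept it stands for.
TRIPLES = [
    ("w", ":instance", "want-01"),
    ("b", ":instance", "boy"),
    ("g", ":instance", "go-02"),
    ("w", ":ARG0", "b"),      # the boy is the one who wants
    ("w", ":ARG1", "g"),      # what is wanted: the going
    ("g", ":ARG0", "b"),      # the boy is also the one who goes
    ("w", ":polarity", "-"),  # negation of the wanting
]


def concepts(triples):
    """Map each variable to its concept."""
    return {s: t for s, r, t in triples if r == ":instance"}


def edges(triples):
    """Relations whose target is another node."""
    nodes = concepts(triples)
    return [(s, r, t) for s, r, t in triples if r != ":instance" and t in nodes]


def attributes(triples):
    """Relations whose target is a constant such as '-' or a number."""
    nodes = concepts(triples)
    return [(s, r, t) for s, r, t in triples if r != ":instance" and t not in nodes]


def reentrant_nodes(triples):
    """Nodes with more than one incoming edge (shared participants)."""
    incoming = Counter(t for _, _, t in edges(triples))
    return sorted(v for v, n in incoming.items() if n > 1)


def is_negated(triples, variable):
    """True if the node carries ':polarity -'."""
    return (variable, ":polarity", "-") in triples
```

In this sketch, `reentrant_nodes(TRIPLES)` reports the variable `b`, because the boy fills a role of both want-01 and go-02. Such shared nodes are what make an AMR a graph rather than a tree.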

Example AMR

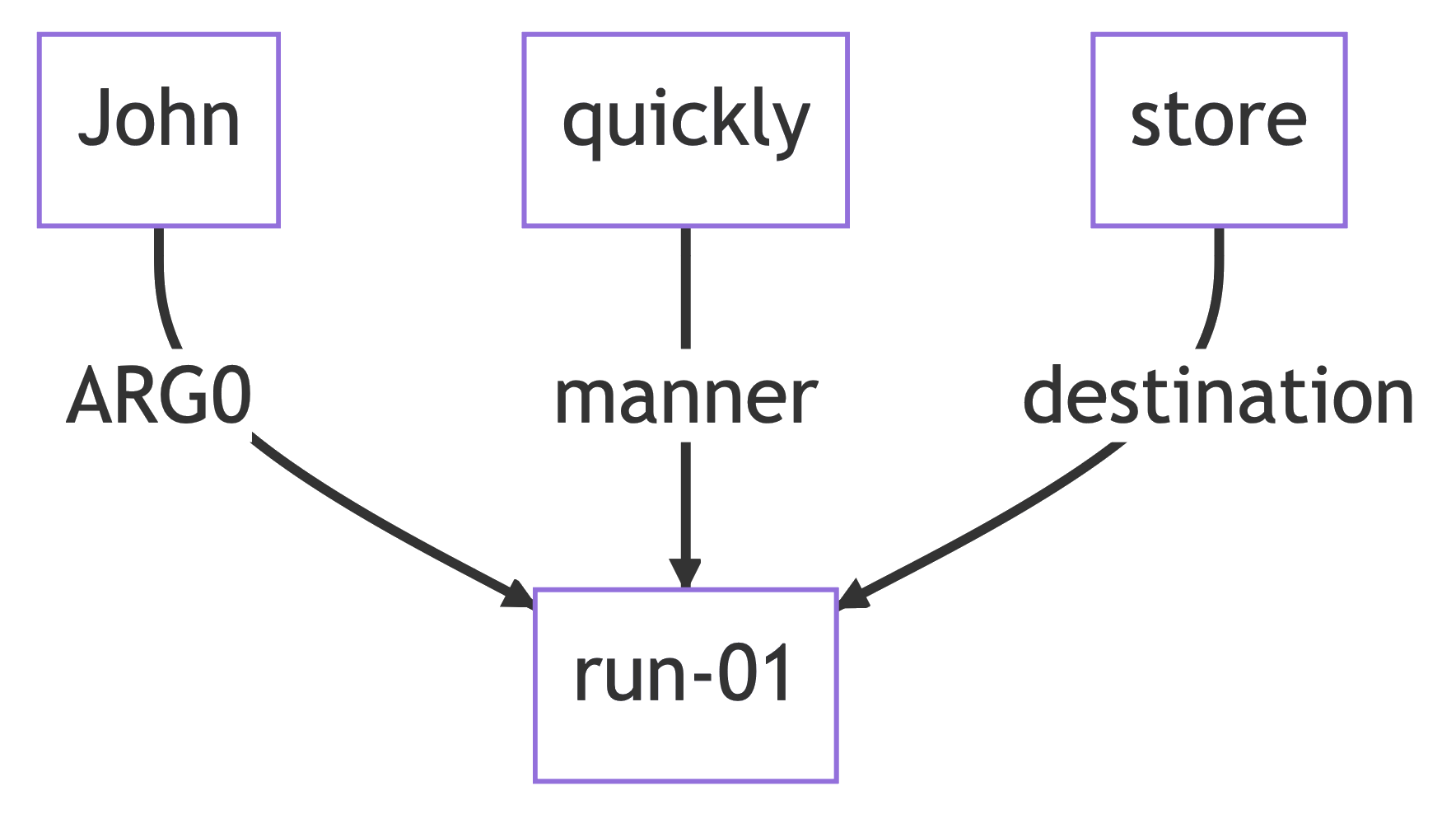

Consider the example sentence, “John quickly ran to the store.” An AMR graph for this sentence captures the key details:

- John is the ARG0 (agent) of the action,

- ran is the predicate representing the main action of the sentence,

- store is the ARG4, here the destination toward which the action is directed, and

- quickly is a modifier indicating the manner of the action.

The resulting AMR graph would look something like the following:

(r / run
    :ARG0 (p / person :name (n / name :op1 "John"))
    :ARG4 (s / store)
    :manner (q / quick))
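The bracketed notation above can be read mechanically. The following is a minimal sketch of a reader that turns such a string into (source, role, target) triples using only the Python standard library. The tokenizer pattern and the function name `parse_penman` are assumptions made for illustration; the sketch handles only the simple subset of the notation used in this article, not the full format.

```python
import re

# Tokens: parentheses, the "/" separator, quoted strings,
# roles (":ARG0"), and plain symbols (variables, concepts, constants).
TOKEN = re.compile(r'\(|\)|/|"[^"]*"|:[^\s()]+|[^\s()/:"]+')

EXAMPLE = """
(r / run
    :ARG0 (p / person :name (n / name :op1 "John"))
    :ARG4 (s / store)
    :manner (q / quick))
"""


def parse_penman(text):
    """Return (top_variable, triples) for a simple bracketed AMR string."""
    tokens = TOKEN.findall(text)
    pos = 0
    triples = []

    def parse_node():
        nonlocal pos
        # A node has the shape: "(" variable "/" concept (role target)* ")"
        if tokens[pos] != "(" or tokens[pos + 2] != "/":
            raise ValueError("expected '(variable / concept ...'")
        var, concept = tokens[pos + 1], tokens[pos + 3]
        pos += 4
        triples.append((var, ":instance", concept))
        while tokens[pos] != ")":
            role = tokens[pos]
            pos += 1
            if tokens[pos] == "(":
                target = parse_node()   # nested node: recurse
            else:
                target = tokens[pos]    # constant or re-used variable
                pos += 1
            triples.append((var, role, target))
        pos += 1  # consume the closing ")"
        return var

    top = parse_node()
    return top, triples


top, triples = parse_penman(EXAMPLE)
for triple in triples:
    print(triple)
```

For the example graph, the root variable is `r` and the reader yields ten triples: five `:instance` triples for the nodes and five relations between them (including the `:op1 "John"` attribute). For real work, a dedicated library for this notation is a better choice than a hand-written reader.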

Visualisation of an AMR graph

Prompt (GPT-4):

abstract meaning representation for “John quickly ran to the store.”

Result: Abstract Meaning Representation (AMR) is a semantic representation language for natural language. The sentence “John quickly ran to the store” can be represented in AMR as follows:

(r / run-01
    :ARG0 (j / John)
    :manner (q / quickly)
    :destination (s / store))

Here’s how this graph is structured:

(r / run-01) signifies the main action, which is ‘run’. The ‘-01’ means the most common sense of the verb ‘run’.

:ARG0 (j / John) shows that John is the one doing the running. ARG0 typically signifies the main participant in the action.

:manner (q / quickly) shows the manner in which the running happened, which is ‘quickly’.

:destination (s / store) shows the destination of the running, which is ‘store’.

This graph provides a compact representation of the sentence’s meaning, highlighting its core components and relationships.

Applications of AMR

AMR finds use in various NLP tasks and applications:

- Natural language understanding: AMR provides a structured representation of the meaning of a sentence. This makes it easier for machines to understand and process natural language.

- Semantic search: AMR can be used to construct more sophisticated queries in semantic search engines, leading to improved search results based on the meaning of the query.

- [Question answering](https://unimatrixz.com/topics/ai-text/nlp-tasks/advanced-nlp-tasks/question-answering/): With AMR, a question and its possible answers can be structured, enabling algorithms to compare and rank the answers based on their semantic similarity to the question.

- Summarization: AMR can help identify a text’s essential concepts and relationships, making it easier to generate informative summaries.

- Ontology learning: AMR can facilitate creating and refining ontologies (structured knowledge bases) by grounding concepts and relationships found in natural language into a formal representation.

Similar NLP Tasks

Some NLP tasks similar to Abstract Meaning Representation (AMR) include:

- Semantic parsing - Mapping natural language to a formal meaning representation. AMR is one approach to semantic parsing.

- Semantic role labeling - Identifying sentence constituents’ semantic roles (e.g., agent, patient, instrument). AMR incorporates semantic role information.

- Named Entity Recognition - Identifying and classifying entities (people, locations, organizations) in text. AMR represents entities and their types.

- Coreference resolution - Identifying when two expressions refer to the same entity. AMR represents coreferring expressions within a sentence by reusing the same node for both mentions.

- Relation Extraction - Identifying and classifying relationships between entities or concepts. AMR represents many semantic relations between concepts and entities.

Generally, any task focused on extracting meaning, semantics, or knowledge from natural language text is similar to AMR. AMR aims to capture meaning through a graph-based representation, so other graph-based or structured meaning representations are similar. Some other similar formalisms include:

- Universal Conceptual Cognitive Annotation (UCCA)

- Semantic Dependency Parsing

- FrameNet

- ConceptNet

- Abstract Syntax Trees (ASTs)

In summary, the broad areas of semantic parsing, knowledge extraction, and graph-based meaning representations are most similar to the goals and purpose of AMR.

Current state and future directions

AMR parsing remains a challenging task. State-of-the-art neural models achieve a Smatch score of around 77%, where Smatch is an F-score over the relation triples shared by the predicted graph and the reference graph, so there is still room for improvement in the parsing process. Research continues to refine and expand upon AMR-based techniques.
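To give a feel for how a Smatch-style score is computed, here is a simplified sketch. It treats both graphs as sets of (source, role, target) triples, tries every one-to-one mapping from predicted variables to reference variables, and reports precision, recall, and F1 for the best mapping. This is an illustration under stated assumptions, not the official scorer: real implementations use a heuristic search instead of brute force and also score the root of the graph. The function names and the example graphs are hypothetical.

```python
from itertools import permutations


def variables(triples):
    """Variables are the sources of ':instance' triples."""
    return sorted({s for s, r, _ in triples if r == ":instance"})


def rename(triples, mapping):
    """Rewrite variables according to mapping; leave concepts untouched."""
    renamed = set()
    for s, r, t in triples:
        new_t = t if r == ":instance" else mapping.get(t, t)
        renamed.add((mapping.get(s, s), r, new_t))
    return renamed


def smatch_like(pred, gold):
    """Brute-force triple-overlap score; only feasible for small graphs."""
    pred_vars, gold_vars = variables(pred), variables(gold)
    gold_set = set(gold)
    # Pad with None so surplus predicted variables can stay unmapped.
    padding = [None] * max(0, len(pred_vars) - len(gold_vars))
    best = 0
    for perm in permutations(gold_vars + padding, len(pred_vars)):
        mapping = {
            p: (g if g is not None else f"<unmapped {p}>")
            for p, g in zip(pred_vars, perm)
        }
        best = max(best, len(rename(pred, mapping) & gold_set))
    precision = best / len(set(pred)) if pred else 0.0
    recall = best / len(gold_set) if gold_set else 0.0
    if precision + recall == 0:
        return 0.0, 0.0, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Reference graph for "John quickly ran to the store" (as in the example above).
GOLD = [
    ("r", ":instance", "run"), ("p", ":instance", "person"),
    ("n", ":instance", "name"), ("s", ":instance", "store"),
    ("q", ":instance", "quick"), ("r", ":ARG0", "p"),
    ("p", ":name", "n"), ("n", ":op1", '"John"'),
    ("r", ":ARG4", "s"), ("r", ":manner", "q"),
]

# Hypothetical parser output that misses the store and uses other variable names.
PRED = [
    ("a", ":instance", "run"), ("b", ":instance", "person"),
    ("c", ":instance", "name"), ("d", ":instance", "quick"),
    ("a", ":ARG0", "b"), ("b", ":name", "c"),
    ("c", ":op1", '"John"'), ("a", ":manner", "d"),
]

precision, recall, f1 = smatch_like(PRED, GOLD)
print(f"P={precision:.2f} R={recall:.2f} F1={f1:.2f}")  # P=1.00 R=0.80 F1=0.89
```

Here all eight predicted triples match the reference, but two of the ten reference triples (the store node and its ARG4 edge) are missing, which lowers recall and therefore the F-score.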

Some recent work aims to extend AMR to new domains. Examples include using AMR representations for images, mathematics, and chemistry. These efforts could pave the way for applying AMR-based techniques to various interdisciplinary problems.

Another interesting direction for AMR is its potential use as an intermediate representation for grounded natural language generation (NLG). This would involve mapping from non-linguistic representations (e.g., the output of a computer vision system) to an AMR-like graph and then generating human-readable text describing the observed scene.

Conclusions

AMR is a powerful semantic representation for natural language understanding, providing a structured way to parse and represent the meaning of sentences. Its expressiveness and applicability to various NLP tasks make it a valuable tool for researchers and practitioners.

Despite the current challenges, the opportunities for future work with AMR are exciting, with potential applications spanning diverse domains.