Understanding Coreference Resolution in NLP and Its Importance

Coreference resolution is a crucial task in natural language processing (NLP) that identifies all the expressions (mentions) in a text that refer to the same real-world entity and groups them together. Linking these mentions lets a machine interpret the text at a deeper semantic level, much as human readers do without conscious effort.

Coreference resolution matters because many NLP tasks depend on knowing which mentions point to the same thing, and errors at this step propagate into everything built on top of it. Typical coreference links include a pronoun and its referent (in “Mary said she would come,” “she” refers to “Mary”), different noun phrases naming the same entity (“United Airlines” and “United”), and different expressions describing the same event or process.

Approaches to Coreference Resolution

There are three main approaches to coreference resolution: rule-based, machine learning-based, and hybrid approaches.

Rule-based Approaches

Rule-based methods rely on a predefined set of linguistic rules and constraints to determine whether two phrases refer to the same entity. These rules may include preferences for selecting an antecedent among several candidates, agreement in number and gender, and selectional restrictions (semantic constraints a verb places on its arguments). For example, a simple rule-based approach may state that two noun phrases with the same head noun and modifiers corefer.
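
A few such rules can be sketched in Python. This is a minimal illustration under stated assumptions, not any particular published system: mentions are assumed to be already detected and tagged with number and gender, the `Mention` class and `resolve` function are hypothetical, and searching candidates nearest-first (a recency preference) is a choice made for this example.

```python
from dataclasses import dataclass


@dataclass
class Mention:
    text: str
    number: str       # "sg" or "pl"
    gender: str       # "m", "f", "n", or "unknown"
    is_pronoun: bool


def agree(a: Mention, b: Mention) -> bool:
    """Number and gender agreement; an 'unknown' gender is compatible with anything."""
    if a.number != b.number:
        return False
    return a.gender == b.gender or "unknown" in (a.gender, b.gender)


def same_head_and_modifiers(a: Mention, b: Mention) -> bool:
    """Two non-pronominal noun phrases with identical wording (same head noun
    and same modifiers) are assumed to corefer."""
    return (not a.is_pronoun and not b.is_pronoun
            and a.text.lower() == b.text.lower())


def resolve(mentions):
    """Link each mention to the nearest preceding mention that satisfies a rule."""
    cluster_of = list(range(len(mentions)))  # every mention starts in its own cluster
    for i, m in enumerate(mentions):
        for j in range(i - 1, -1, -1):       # nearest candidate first (recency)
            cand = mentions[j]
            if (m.is_pronoun and agree(m, cand)) or same_head_and_modifiers(m, cand):
                cluster_of[i] = cluster_of[j]  # join the antecedent's cluster
                break
    clusters = {}
    for i, cid in enumerate(cluster_of):
        clusters.setdefault(cid, []).append(mentions[i].text)
    return list(clusters.values())


# "John met Mary at the station. She thanked him at the station."
mentions = [
    Mention("John", "sg", "m", False),
    Mention("Mary", "sg", "f", False),
    Mention("the station", "sg", "n", False),
    Mention("She", "sg", "f", True),
    Mention("him", "sg", "m", True),
    Mention("the station", "sg", "n", False),
]
print(resolve(mentions))
# [['John', 'him'], ['Mary', 'She'], ['the station', 'the station']]
```

The brittleness is easy to see: insert an unknown-gender mention such as “the manager” between “Mary” and “She,” and the recency search attaches “She” to the manager instead.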

One advantage of rule-based approaches is that they are transparent and interpretable, making it easier to debug and improve the system based on user feedback. However, these methods often fail to capture complex linguistic phenomena and may not generalize well to unseen data or to other languages.

Machine Learning-based Approaches

Machine learning-based methods, on the other hand, learn from data which mentions refer to the same entity instead of relying on hand-written rules. These approaches fall into two categories: supervised and unsupervised learning.

In supervised learning, a model is trained on a labeled dataset in which coreference relationships are annotated, and it then generalizes to make predictions on unseen text. Common algorithms for supervised coreference resolution include decision trees, support vector machines, and neural networks.
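
One common supervised formulation is the mention-pair model: a binary classifier decides, for each (antecedent, anaphor) pair, whether the two corefer. The sketch below uses logistic regression trained by stochastic gradient descent as a simple stand-in for the algorithms listed above. Everything specific here is an assumption for illustration: the three features, the hypothetical `pair_features` helper, and the tiny hand-made training set, which is invented and is not real annotated data.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def pair_features(antecedent, anaphor, sentence_distance):
    """Features for one (antecedent, anaphor) pair; mentions are plain dicts."""
    exact_match = antecedent["text"].lower() == anaphor["text"].lower()
    agreement = (antecedent["number"] == anaphor["number"]
                 and antecedent["gender"] == anaphor["gender"])
    return [float(exact_match), float(agreement), float(sentence_distance)]


def train_logistic_regression(X, y, lr=0.1, epochs=3000):
    """Stochastic gradient descent on the logistic (cross-entropy) loss."""
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            p = sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi)))
            err = yi - p  # gradient of the log-likelihood w.r.t. the score
            w = [wj + lr * err * xj for wj, xj in zip(w, xi)]
            b += lr * err
    return w, b


def coref_probability(w, b, x):
    return sigmoid(b + sum(wj * xj for wj, xj in zip(w, x)))


# Toy training pairs: [exact_match, agreement, sentence_distance] -> corefers?
X_train = [[1, 1, 0], [1, 1, 1], [0, 1, 0], [0, 1, 1],
           [0, 0, 0], [0, 0, 1], [0, 0, 2], [0, 1, 3]]
y_train = [1, 1, 1, 1, 0, 0, 0, 0]
w, b = train_logistic_regression(X_train, y_train)

john = {"text": "John", "number": "sg", "gender": "m"}
mary = {"text": "Mary", "number": "sg", "gender": "f"}
he = {"text": "he", "number": "sg", "gender": "m"}
print(coref_probability(w, b, pair_features(john, he, 0)))  # pair (John, he)
print(coref_probability(w, b, pair_features(mary, he, 0)))  # pair (Mary, he)
```

A full system would still need a decoding step, for example linking each anaphor to its highest-scoring antecedent above 0.5, to turn pairwise decisions into clusters.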

Unsupervised learning involves clustering phrases without guidance from labeled data. The goal is to group phrases that refer to the same entity based on similarities in their features. This approach may employ methods like hierarchical clustering or probabilistic models.
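
Hierarchical (agglomerative) clustering can be sketched without any labels. As a simplifying assumption, the only “feature” here is word overlap (Jaccard similarity) between mention strings, and the 0.5 merge threshold is an arbitrary illustrative value; `agglomerative_cluster` is a hypothetical helper, not a library function.

```python
def jaccard(a, b):
    """Token-overlap similarity between two mention strings."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def single_link(c1, c2):
    """Similarity of two clusters = similarity of their closest pair of mentions."""
    return max(jaccard(a, b) for a in c1 for b in c2)


def agglomerative_cluster(mentions, threshold=0.5):
    """Bottom-up clustering: repeatedly merge the most similar pair of
    clusters until no pair reaches the threshold."""
    clusters = [[m] for m in mentions]
    while len(clusters) > 1:
        best, best_pair = -1.0, None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                s = single_link(clusters[i], clusters[j])
                if s > best:
                    best, best_pair = s, (i, j)
        if best < threshold:
            break
        i, j = best_pair
        clusters[i].extend(clusters[j])
        del clusters[j]  # j > i, so index i is unaffected
    return clusters


mentions = ["United Airlines", "American Airlines", "United",
            "Tim Wagner", "American", "Wagner"]
print(agglomerative_cluster(mentions))
# [['United Airlines', 'United'], ['American Airlines', 'American'], ['Tim Wagner', 'Wagner']]
```

String overlap alone can never link a pronoun such as “it” to anything, which is one reason practical unsupervised systems rely on richer features or probabilistic models.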

The primary advantage of machine learning-based methods is their ability to capture complex patterns in the data, which often lets them outperform rule-based approaches. However, supervised methods require annotated training data, which is expensive and time-consuming to produce.

Hybrid Approaches

Hybrid approaches aim to combine the strengths of rule-based and machine learning-based methods by using both linguistic knowledge and learned patterns. These methods may layer rules on top of a machine-learning model (for example, using rules as hard constraints that discard impossible antecedents before the model scores the rest) or use rules to refine the clustering produced by an unsupervised method.
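
The first option, rules layered on a learned model, can be sketched as follows. This is an illustration under stated assumptions: `LEARNED_WEIGHTS` and `LEARNED_BIAS` are hypothetical numbers standing in for a trained model's parameters, and the agreement rule is the only hard constraint.

```python
from dataclasses import dataclass


@dataclass
class Mention:
    text: str
    number: str    # "sg" or "pl"
    gender: str    # "m", "f", "n", or "unknown"
    sentence: int  # index of the sentence containing the mention


# Hypothetical parameters standing in for a trained model.
LEARNED_WEIGHTS = {"exact_match": 2.0, "same_sentence": 0.8, "distance": -0.5}
LEARNED_BIAS = 1.0


def passes_rules(anaphor, candidate):
    """Hard linguistic constraints: number and gender must agree."""
    if anaphor.number != candidate.number:
        return False
    return anaphor.gender == candidate.gender or "unknown" in (anaphor.gender, candidate.gender)


def learned_score(anaphor, candidate):
    """Linear score from the (hypothetical) learned model; higher means more likely."""
    dist = anaphor.sentence - candidate.sentence
    features = {
        "exact_match": float(anaphor.text.lower() == candidate.text.lower()),
        "same_sentence": float(dist == 0),
        "distance": float(dist),
    }
    return LEARNED_BIAS + sum(LEARNED_WEIGHTS[k] * v for k, v in features.items())


def best_antecedent(anaphor, candidates, min_score=0.0):
    """Rules prune the candidates; the learned model ranks whatever survives."""
    allowed = [c for c in candidates if passes_rules(anaphor, c)]
    if not allowed:
        return None
    best = max(allowed, key=lambda c: learned_score(anaphor, c))
    return best if learned_score(anaphor, best) > min_score else None


# "United Airlines met analysts on Friday. Citing fuel costs, it raised fares."
candidates = [
    Mention("United Airlines", "sg", "n", 0),
    Mention("analysts", "pl", "unknown", 0),
    Mention("fuel costs", "pl", "n", 1),
]
pronoun = Mention("it", "sg", "n", 1)
model_only = max(candidates, key=lambda c: learned_score(pronoun, c))
print(model_only.text)                            # fuel costs
print(best_antecedent(pronoun, candidates).text)  # United Airlines
```

On its own, the toy model prefers the nearby plural “fuel costs”; the agreement rule removes that candidate, so the hybrid picks “United Airlines.”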

Challenges in Coreference Resolution

Several factors make coreference resolution difficult for machines, and sometimes even for human readers. They include:

Ambiguity and Anaphora

One of the primary challenges in coreference resolution is the presence of ambiguous and anaphoric expressions. Anaphora is the use of a pronoun or other expression to refer back to a previously mentioned entity. For example, in the sentence “John visited his friend, and then he went home,” the pronoun “he” most plausibly refers to “John,” yet “his friend” is also a grammatically possible antecedent. Identifying the correct antecedent for an anaphoric expression can be challenging, especially when the context does not settle the question.
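
The ambiguity can be made concrete in a small sketch. The grammatical roles and token positions are hand-tagged assumptions, the possessive “his” is ignored for simplicity, and recency and subject preference are two illustrative heuristics rather than a complete resolver.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    number: str    # "sg" or "pl"
    gender: str    # "m", "f", "n", or "unknown"
    role: str      # grammatical role, e.g. "subject" or "object"
    position: int  # token index where the mention starts


def compatible(number, gender, c):
    """Agreement filter; an 'unknown' gender (e.g. 'friend') matches anything."""
    return c.number == number and (c.gender == gender or c.gender == "unknown")


def by_recency(cands):
    return max(cands, key=lambda c: c.position)


def by_subject_preference(cands):
    # Prefer subjects; break ties by recency.
    return max(cands, key=lambda c: (c.role == "subject", c.position))


# "John visited his friend, and then he went home."  Candidates for "he":
candidates = [
    Candidate("John", "sg", "m", "subject", 0),
    Candidate("his friend", "sg", "unknown", "object", 2),
]
survivors = [c for c in candidates if compatible("sg", "m", c)]
print([c.text for c in survivors])            # ['John', 'his friend']
print(by_recency(survivors).text)             # his friend
print(by_subject_preference(survivors).text)  # John
```

Agreement alone leaves both candidates standing, and two reasonable heuristics disagree, which is exactly the situation a system must resolve from wider context.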

Entity and Event Coreference

Another challenge is the need to resolve both entity and event coreference. Entity coreference covers cases where two or more noun phrases refer to the same entity. Event coreference, in contrast, covers cases where different expressions describe the same event or process, such as a verb phrase like “increased fares” and a later noun phrase like “the increase.” Resolving both types is critical for a comprehensive understanding of a text.

Cross-document Coreference

Cross-document coreference resolution involves identifying coreferential relationships across multiple documents. This task is particularly challenging because the same entity may be named differently in different documents, different entities may share a name, and the number of candidate mention pairs grows rapidly with the size of the collection.

Evaluation Metrics

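Coreference systems are scored with precision, recall, and F1. The standard coreference metrics (MUC, B³, and CEAF, whose F1 scores are often averaged into a single CoNLL score) share these building blocks but differ in what they count as a correct “relationship.” In the simplest, link-based reading: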

- Precision: The proportion of correct coreference relationships identified by the system out of all predicted relationships.

- Recall: The proportion of the coreference relationships in the gold-standard (human-annotated) data that the system correctly identifies.

- F1-score: The harmonic mean of precision and recall, providing a single value that balances the trade-off between precision and recall. A higher F1 score indicates better performance.
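
The link-based definitions above can be computed directly from clusters. In this sketch a “relationship” is any unordered pair of mentions placed in the same cluster; the mention IDs `m1` to `m5` are hypothetical. Because MUC, B³, and CEAF count differently, they would generally give different numbers on the same output.

```python
from itertools import combinations


def links(clusters):
    """All unordered mention pairs that a clustering places in the same entity."""
    return {frozenset(pair)
            for cluster in clusters
            for pair in combinations(cluster, 2)}


def pairwise_prf(gold_clusters, predicted_clusters):
    gold, pred = links(gold_clusters), links(predicted_clusters)
    correct = len(gold & pred)
    precision = correct / len(pred) if pred else 0.0
    recall = correct / len(gold) if gold else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Mentions are identified by hypothetical IDs m1..m5.
gold = [["m1", "m2", "m3"], ["m4", "m5"]]
predicted = [["m1", "m2"], ["m3", "m4", "m5"]]
print(pairwise_prf(gold, predicted))  # (0.5, 0.5, 0.5)
```

Gold has four links (m1-m2, m1-m3, m2-m3, m4-m5), the prediction has four (m1-m2, m3-m4, m3-m5, m4-m5), and two are shared, so precision and recall are both 2/4.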

Applications of Coreference Resolution

Coreference resolution plays an essential role in many NLP tasks, including:

- Information Extraction: Extracting structured information from unstructured text requires identifying coreferential relationships to represent the content coherently.

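- Machine Translation: Inaccurate coreference resolution can lead to errors in translating pronouns and other referring expressions; for example, English “it” may need to become German “er,” “sie,” or “es” depending on the grammatical gender of its antecedent.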

- Question Answering: Answering questions about a text requires understanding the relationships between entities and events, so coreference resolution is vital for reliable question answering.

- Sentiment Analysis: Sentiment analysis involves determining the opinion or emotion expressed in a text. Identifying coreference relationships helps to determine which entities are the targets of these opinions or emotions.

- Text Summarization: Generating a concise text summary requires identifying the main entities and events, which relies on accurate coreference resolution.
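
Information extraction and summarization pipelines can use resolved clusters to rewrite later mentions with a more informative one, so that each sentence can be processed on its own. A minimal sketch, assuming a resolver has already produced clusters as character spans; `substitute_mentions` is a hypothetical helper, and treating the first mention as the canonical name is a simplifying choice.

```python
def substitute_mentions(text, clusters):
    """Replace each later mention in a cluster with the cluster's first mention.

    clusters: lists of (start, end) character spans, assumed not to overlap.
    """
    replacements = []
    for cluster in clusters:
        spans = sorted(cluster)
        first_start, first_end = spans[0]
        canonical = text[first_start:first_end]
        for start, end in spans[1:]:
            replacements.append((start, end, canonical))
    # Apply right to left so earlier character offsets stay valid.
    for start, end, canonical in sorted(replacements, reverse=True):
        text = text[:start] + canonical + text[end:]
    return text


text = "United Airlines raised fares. It said the increase applies to most routes."
it_start = text.index("It")
clusters = [[(0, len("United Airlines")), (it_start, it_start + len("It"))]]
print(substitute_mentions(text, clusters))
# United Airlines raised fares. United Airlines said the increase applies to most routes.
```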

Prompt Example

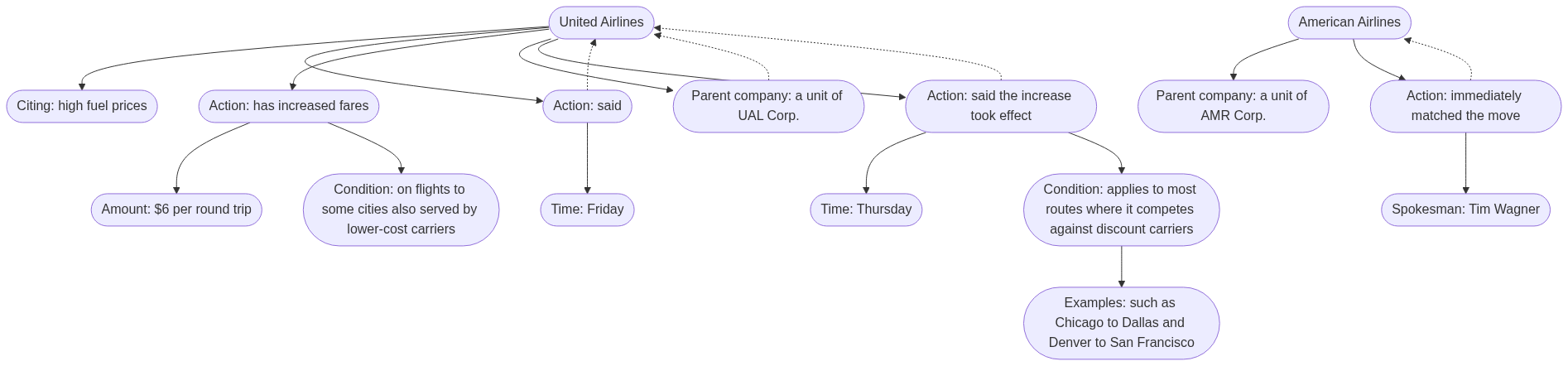

Prompt - as Graph:

coreference resolution for:

“Citing high fuel prices, United Airlines said Friday it has increased fares by $6 per round trip on flights to some cities also served by lower-cost carriers. American Airlines, a unit of AMR Corp., immediately matched the move, spokesman Tim Wagner said. United, a unit of UAL Corp., said the increase took effect Thursday and applies to most routes where it competes against discount carriers, such as Chicago to Dallas and Denver to San Francisco.” as graph

Prompt - as Table:

coreference resolution for:

“Citing high fuel prices, United Airlines said Friday it has increased fares by $6 per round trip on flights to some cities also served by lower-cost carriers. American Airlines, a unit of AMR Corp., immediately matched the move, spokesman Tim Wagner said. United, a unit of UAL Corp., said the increase took effect Thursday and applies to most routes where it competes against discount carriers, such as Chicago to Dallas and Denver to San Francisco.” as table

Result: Below is a table showing the coreference resolution for the given text:

| Entity | Reference | Text |
|---|---|---|
| United Airlines | United | Citing high fuel prices, United Airlines said Friday it has increased fares by $6 per round trip on flights to some cities also served by lower-cost carriers. |
| | a unit of UAL Corp. | United, a unit of UAL Corp., said the increase took effect Thursday and applies to most routes where it competes against discount carriers, such as Chicago to Dallas and Denver to San Francisco. |
| American Airlines | American Airlines | American Airlines, a unit of AMR Corp., immediately matched the move, spokesman Tim Wagner said. |
| AMR Corp. | a unit of AMR Corp. | American Airlines, a unit of AMR Corp., immediately matched the move, spokesman Tim Wagner said. |
| Tim Wagner | spokesman Tim Wagner | American Airlines, a unit of AMR Corp., immediately matched the move, spokesman Tim Wagner said. |
| Lower-cost carriers | discount carriers | United, a unit of UAL Corp., said the increase took effect Thursday and applies to most routes where it competes against discount carriers, such as Chicago to Dallas and Denver to San Francisco. |
| | lower-cost carriers | Citing high fuel prices, United Airlines said Friday it has increased fares by $6 per round trip on flights to some cities also served by lower-cost carriers. |
| Chicago to Dallas | Chicago to Dallas | United, a unit of UAL Corp., said the increase took effect Thursday and applies to most routes where it competes against discount carriers, such as Chicago to Dallas and Denver to San Francisco. |
| Denver to San Francisco | Denver to San Francisco | United, a unit of UAL Corp., said the increase took effect Thursday and applies to most routes where it competes against discount carriers, such as Chicago to Dallas and Denver to San Francisco. |
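
For comparison with the model's output, the sketch below records one plausible hand annotation of the same passage as coreference chains and turns each chain into graph edges, the kind of structure the “as graph” prompt asks for. The chains are an illustrative reading, not output from the model; annotation schemes differ on points such as whether an appositive like “a unit of UAL Corp.” counts as a coreferent mention. The third chain is an event chain, matching the entity/event distinction discussed earlier. `CHAINS` and `coreference_edges` are hypothetical names.

```python
PASSAGE = (
    "Citing high fuel prices, United Airlines said Friday it has increased fares "
    "by $6 per round trip on flights to some cities also served by lower-cost "
    "carriers. American Airlines, a unit of AMR Corp., immediately matched the "
    "move, spokesman Tim Wagner said. United, a unit of UAL Corp., said the "
    "increase took effect Thursday and applies to most routes where it competes "
    "against discount carriers, such as Chicago to Dallas and Denver to San "
    "Francisco."
)

# One illustrative hand annotation; mentions are listed in textual order.
CHAINS = {
    "United Airlines": ["United Airlines", "it", "United", "a unit of UAL Corp.", "it"],
    "American Airlines": ["American Airlines", "a unit of AMR Corp."],
    "fare increase (event)": ["increased fares", "the move", "the increase"],
    "discount carriers": ["lower-cost carriers", "discount carriers"],
}


def coreference_edges(chains):
    """Turn each chain into graph edges linking a mention to the previous one."""
    return [(label, earlier, later)
            for label, mentions in chains.items()
            for earlier, later in zip(mentions, mentions[1:])]


for label, earlier, later in coreference_edges(CHAINS):
    print(f"{later!r} -> {earlier!r}  [{label}]")
```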

Conclusion

Coreference resolution is a critical NLP task that has seen significant progress in recent years. More advanced machine learning techniques, particularly neural models, together with richer linguistic knowledge, have steadily improved the performance of coreference systems.

However, several challenges remain, such as ambiguous anaphora, event coreference, and cross-document coreference. Addressing them will require continued research into more sophisticated models and deeper linguistic understanding.

As coreference resolution continues to improve, systems that depend on it, in areas like information extraction, machine translation, and question answering, will become more accurate and valuable, further enhancing the ability of machines to understand and process human language.