Text-Completion and Fill-Mask Models in Natural Language Processing: Roles, Capabilities, and Limitations


Natural language processing (NLP) has made significant progress, driven in part by two tasks: text completion and fill-mask.

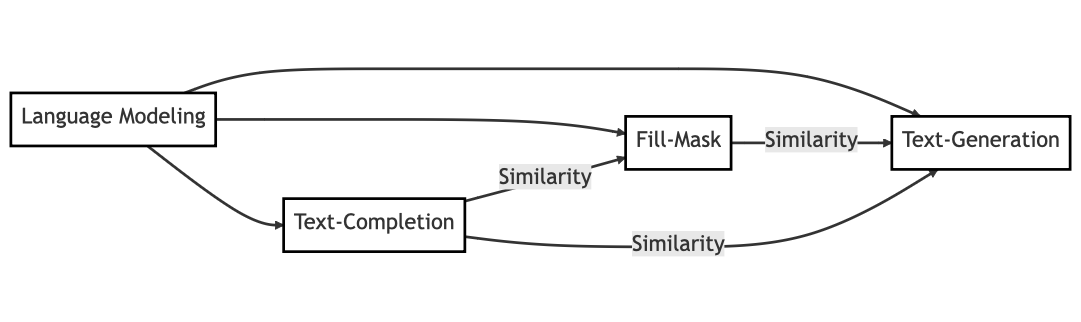

Text-completion tasks involve predicting the words or phrases that continue a given context, and they underpin many NLP applications. However, they can generate ambiguous or irrelevant content. In contrast, fill-mask tasks aim to predict the most appropriate words for masked positions within a text. Both tasks have strengths and limitations, so choosing the right one for a specific application is crucial. AI-driven communication tools often use both to provide various functionalities.

Ongoing research and exploration of these tasks’ capabilities will support the development of more sophisticated natural language understanding solutions.

Text-Completion

Definition and Purpose of Text-Completion Models

Text-completion models are trained to generate text by predicting the words or phrases that should come next in a given context.

They can generate natural-sounding sentences and contribute to NLP tasks such as content generation and translation. Text generation builds on text completion: a longer passage is produced by repeatedly completing the text generated so far.

How Long Text-Completion Models Have Existed in NLP

Text-completion models have existed since the early days of NLP, with newer models constantly being developed to improve the quality and coherence of the generated text.

Role of Text-Completion in Various NLP Tasks

Text-completion models play a critical role in machine translation, paraphrasing, summarization, and even chatbot applications by understanding and generating context-appropriate content.

A Few Examples Showing Text-Completion Capabilities

Given a sentence like “The weather today is ___,” a text-completion model might suggest words like “sunny” or “rainy.” In a sentence like “The capital of France is ___,” the model would generate “Paris” as the best completion.
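
The two prompts above can be reproduced with a deliberately tiny sketch. It is not how a production text-completion model works: it only counts which word follows each pair of words in a made-up corpus. The corpus and the helper names `train` and `suggest_next` are assumptions introduced for illustration.

```python
from collections import Counter, defaultdict

# Tiny invented corpus; a real model is trained on vastly more text.
CORPUS = [
    "the weather today is sunny",
    "the weather today is rainy",
    "the weather today is sunny and warm",
    "the capital of france is paris",
]

def train(sentences):
    """Count which word follows each pair of consecutive words."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for first, second, nxt in zip(words, words[1:], words[2:]):
            counts[(first, second)][nxt] += 1
    return counts

def suggest_next(counts, prompt, k=2):
    """Return up to k most frequent continuations of the prompt's last two words."""
    words = prompt.lower().split()
    context = (words[-2], words[-1])
    followers = counts.get(context, Counter())
    return [word for word, _ in followers.most_common(k)]

model = train(CORPUS)
print(suggest_next(model, "The weather today is"))      # ['sunny', 'rainy']
print(suggest_next(model, "The capital of France is"))  # ['paris']
```

Because the sketch only looks at the last two words, it returns nothing for a context it has never seen. Modern text-completion models address this by learning from far larger contexts and corpora.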

Limitations of Text-Completion Models

Text-completion models can struggle with a lack of specificity and generate ambiguous or irrelevant content. They may not always be suitable for tasks requiring high precision or understanding of the input text.

Comparison of Fill-Mask Models to Text-Completion Models

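Fill-mask works at the token level and focuses on selecting the best word for a given position in a sentence instead of generating a long sequence of text. Because the model can use the context on both sides of the masked position, this can lead to better accuracy in tasks that hinge on a single word choice.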

Comparing Text-Completion and Fill-Mask

Which NLP Tasks Rely on Text-Completion, and in Which Cases Is Fill-Mask Superior?

Text completion is better suited for tasks like content generation, while fill-mask models work well for sentiment analysis and error correction tasks. The choice between the two models depends on the specific NLP task.

Efficiency and Performance Trade-Offs Between the Two Models

Text-completion models typically require larger datasets and more computation, while fill-mask models are often more efficient in training and at runtime.

Robustness and Adaptability of Both Models for Real-World Applications

Both models can show remarkable robustness and adaptability for real-world applications, though they may need continuous updates and fine-tuning to generalize well to new tasks or domains.

Example Scenarios Comparing the Results of Text-Completion and Fill-Mask Models

A fill-mask model can provide more accurate results in a sentiment analysis task, while a text-completion model may struggle to generate context-specific insights.

Mutual Dependencies Among NLP Tasks

How Text-Completion and Fill-Mask Models Can Be Combined to Solve Complex NLP Problems

The two models can be used together to generate more accurate and contextually relevant content, especially for complex NLP problems that require diverse skill sets.

Co-Existence of These Models in AI-Driven Communication Tools and Platforms

AI-driven communication tools often utilize text-completion and fill-mask models to provide various functionalities like chat automation, summarization, and sentiment analysis.

Future Prospects and Challenges for Interoperability of Text-Completion and Fill-Mask Models

Interoperability between models will be crucial for NLP advancement, requiring ongoing research and collaboration between researchers and practitioners.

Comparing Fill-Mask and Text Completion

Fill-Mask

Fill-mask is a task where the model is given a sentence or a sequence of text with one or more masked tokens (words or word pieces). The model aims to predict the most appropriate token(s) to fill the masked positions. This task is commonly used to pretrain and fine-tune language models such as BERT, which are designed to understand contextual relationships between words.

Example: Input: “I have a pet [MASK].” Output: “I have a pet dog.”
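
As a rough illustration of the idea, the sketch below fills a single `[MASK]` by counting which words appear between the same left and right neighbors in a small invented corpus. A model such as BERT learns this from data with a neural network rather than from raw counts. The corpus and the helpers `tokenize` and `fill_mask` are hypothetical names used only here.

```python
from collections import Counter

MASK = "[MASK]"

# Tiny invented corpus used only for this sketch.
CORPUS = [
    "I have a pet dog.",
    "I have a pet dog.",
    "I have a pet cat.",
    "I have a pet peeve about noise.",
]

def tokenize(text):
    """Lowercase the words, split off periods, and add boundary markers."""
    words = text.replace(".", " .").split()
    return ["<s>"] + [w if w == MASK else w.lower() for w in words] + ["</s>"]

def fill_mask(sentences, masked_text, k=2):
    """Rank candidates for one [MASK] using its left AND right neighbors."""
    tokens = tokenize(masked_text)
    i = tokens.index(MASK)
    left, right = tokens[i - 1], tokens[i + 1]
    candidates = Counter()
    for sentence in sentences:
        words = tokenize(sentence)
        for prev, mid, nxt in zip(words, words[1:], words[2:]):
            if prev == left and nxt == right:
                candidates[mid] += 1
    total = sum(candidates.values())
    return [(word, count / total) for word, count in candidates.most_common(k)]

ranked = fill_mask(CORPUS, "I have a pet [MASK].")
print([(word, round(score, 2)) for word, score in ranked])
# [('dog', 0.67), ('cat', 0.33)]
```

Note that "peeve" is never proposed even though it follows "pet" in the corpus: the word after the mask is a period, and "peeve" is never followed by one. Using the right-hand context in this way is what separates fill-mask from left-to-right completion.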

Text Completion

Text completion, on the other hand, involves generating a coherent and contextually relevant continuation of a given text prompt. This task focuses on generating new content rather than predicting specific missing words. Models like GPT-3, which are autoregressive, excel at text completion tasks.

Example: Input: “Once upon a time in a small village,” Output: “Once upon a time in a small village, there lived a kind old woman who was well-loved by her neighbors. She spent her days tending to her garden and taking care of the animals in the village.”
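
The autoregressive loop behind this kind of output can be sketched in a few lines: predict one token, append it to the text, and repeat. The sketch below uses word-pair counts from an invented corpus and always picks the most frequent next word (greedy decoding). GPT-style models use a neural network and usually more varied sampling, so treat this purely as an illustration. The names `train_bigrams` and `generate` are assumptions.

```python
from collections import Counter, defaultdict

END = "</s>"  # marker appended to every training sentence

# Tiny invented corpus used only for this sketch.
CORPUS = [
    "once upon a time in a small village there lived a kind old woman",
    "there lived a kind old woman who loved her garden",
    "the old woman who loved her garden",
]

def train_bigrams(sentences):
    """Count how often each word follows the previous word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split() + [END]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(counts, prompt, max_new_tokens=20):
    """Greedy autoregressive decoding: each new word is fed back as context."""
    tokens = prompt.lower().split()
    for _ in range(max_new_tokens):
        followers = counts.get(tokens[-1])
        if not followers:
            break  # unseen context: nothing to predict
        next_token = followers.most_common(1)[0][0]
        if next_token == END:
            break  # the model predicts the text is finished
        tokens.append(next_token)
    return " ".join(tokens)

model = train_bigrams(CORPUS)
print(generate(model, "Once upon a time in a small village"))
# once upon a time in a small village there lived a kind old woman who loved her garden
```

The `max_new_tokens` cap matters because a greedy loop over word-pair counts can cycle forever on some corpora.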

Conclusions

Text-completion and fill-mask models play vital roles in various NLP tasks, each with strengths and limitations. Understanding these differences is crucial when selecting a suitable model for a specific task.

As the field of NLP continues to advance, ongoing research and exploration of these models’ capabilities and interoperability will support the development of more sophisticated natural language understanding solutions.

In summary, fill-mask tasks focus on predicting the most appropriate word(s) for masked positions within a given text. In contrast, text-completion tasks involve generating a continuation of a given text prompt. Both tasks play essential roles in training and evaluating NLP models.