Learn about Fill-Mask for smarter text predictions and completions

In the fast-moving field of natural language processing (NLP), language models such as ChatGPT and techniques such as Fill-Mask have become important tools for accurate text prediction and completion.

This blog post will provide insights into both ChatGPT and Fill-Mask, explain their significance, and offer examples of how they can improve your text analysis and prediction process.

What is Fill-Mask?

Fill-Mask is an NLP technique that predicts missing words or phrases in a given text based on the context.

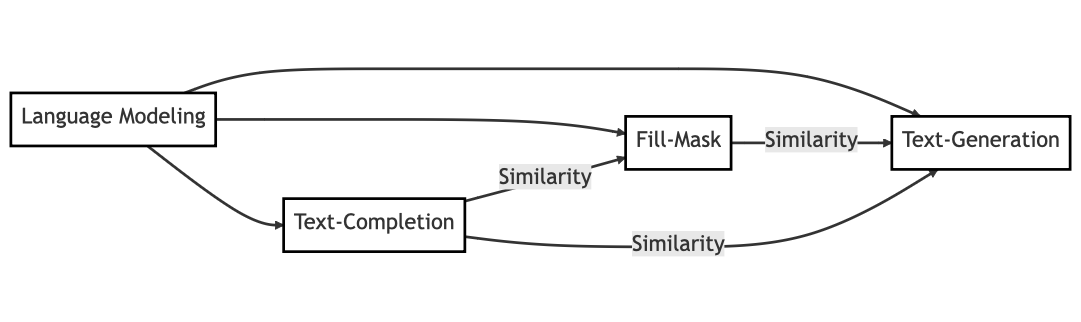

It helps a model learn language semantics and grammatical context so that it can produce accurate and coherent completions. Text generation is a closely related task: instead of filling a gap inside a text, the model continues the text from its end.
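As a minimal sketch of what Fill-Mask looks like in practice, the snippet below assumes the Hugging Face `transformers` library (plus a backend such as PyTorch) is installed and uses its `fill-mask` pipeline. The `bert-base-uncased` checkpoint and the helper names `predict_mask` and `format_predictions` are illustrative choices, not something this post prescribes.

```python
def predict_mask(text, top_k=3, model_name="bert-base-uncased"):
    """Return the top_k candidate fills for the single [MASK] token in text.

    Assumes the Hugging Face `transformers` package is installed. The import
    is deferred so the formatting helper below also works without it.
    """
    from transformers import pipeline

    fill = pipeline("fill-mask", model=model_name)
    return fill(text, top_k=top_k)


def format_predictions(predictions):
    """Turn pipeline output (dicts with 'token_str' and 'score') into lines."""
    return [f"{p['token_str'].strip()}: {p['score']:.2f}" for p in predictions]


# Example call (downloads the model on first use):
# preds = predict_mask("The road was slippery due to the heavy [MASK].")
# for line in format_predictions(preds):
#     print(line)
```

With a single mask in the input, the pipeline returns one list of candidates, each carrying the predicted token and its score, which is what `format_predictions` expects.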

The Fill-Mask task in NLP models

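Modern NLP models such as BERT and RoBERTa use Fill-Mask, also called masked language modeling, as their main pre-training objective. ELECTRA builds on the same idea: a small generator fills in masked tokens, and the main model learns to detect which tokens were replaced. Training this way gives these models a deeper understanding of the context in text, resulting in better predictions and text completions.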
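To show how masking enters the training process, here is a rough sketch of BERT-style input corruption in plain Python. The 15% selection rate and the 80/10/10 split follow the recipe described for the original BERT model. The function name `mask_tokens` and the whitespace tokenization in the usage note are simplifying assumptions for illustration.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Apply BERT-style masking to a list of tokens.

    Returns (inputs, labels). labels holds the original token at each
    selected position and None elsewhere, so a training loss would be
    computed only on the selected positions.
    """
    rng = random.Random(seed)
    inputs = list(tokens)
    labels = [None] * len(tokens)
    for i, token in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = token
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = MASK               # 80%: replace with the mask token
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels
```

Calling `mask_tokens("the quick brown fox jumps over the lazy dog".split(), vocab=["cat", "runs", "blue"], seed=1)` returns a corrupted copy of the sentence together with the labels the model would be asked to recover.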

Importance and applications of Fill-Mask

Fill-Mask is a fundamental NLP task, both because of its role in training and evaluating language models and because of its broad range of applications. Here are the main reasons why it matters:

- Training language models: Fill-Mask is an integral part of the training process for modern language models such as BERT, RoBERTa, and ELECTRA. These models learn to understand the context and semantics of language by predicting missing words or phrases in a given text, which ultimately improves their overall performance.

- Evaluating model performance: Fill-Mask is used to evaluate the performance of NLP models. Researchers and developers can gauge a model’s language understanding capabilities and compare it to other models by assessing its ability to predict missing words in various contexts accurately.

- Enhancing language understanding: Fill-Mask tasks improve a model’s understanding of language structure, grammar, and semantics. As a fundamental NLP task, it helps models develop a deeper understanding of the relationships between words and phrases in various contexts.

- Pre-training for downstream tasks: Fill-Mask serves as a pre-training task for language models, allowing them to learn the nuances of language before being fine-tuned for specific downstream tasks. This pre-training boosts the model’s performance in tasks such as sentiment analysis, named entity recognition, and question answering.

- Versatility across applications: The Fill-Mask technique is helpful for various tasks, including text completion, grammatical error detection, and sentiment analysis. Its significance as a fundamental NLP task stems from its ability to improve the performance of language models in these various applications.

In short, Fill-Mask is a fundamental NLP task because it is used to train and evaluate language models, contributes to language understanding and pre-training for downstream tasks, and is versatile across applications. It is a critical technique for creating advanced NLP models that handle a wide range of tasks and challenges.
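The evaluation use mentioned above can be sketched as a top-k accuracy check: mask a known word, ask the model for candidates, and count how often the true word appears among the first k. The function `top_k_accuracy` and the stand-in predictor `toy_predict` are hypothetical names used only for illustration. In a real setup, `predict` would wrap an actual model.

```python
def top_k_accuracy(examples, predict, k=5):
    """Fraction of examples whose true word is among the top-k predictions.

    examples: list of (masked_text, true_word) pairs.
    predict:  callable returning candidate words, best first.
    """
    if not examples:
        return 0.0
    hits = sum(1 for text, truth in examples if truth in predict(text)[:k])
    return hits / len(examples)


# Hypothetical stand-in for a real model, for illustration only.
def toy_predict(masked_text):
    if "heavy" in masked_text:
        return ["rain", "snow", "traffic"]
    return ["jumps", "leaps", "runs"]


examples = [
    ("The road was slippery due to the heavy [MASK].", "rain"),
    ("The quick brown fox [MASK] over the lazy dog.", "leaps"),
]
print(top_k_accuracy(examples, toy_predict, k=1))  # 0.5
print(top_k_accuracy(examples, toy_predict, k=2))  # 1.0
```

Comparing two models on the same masked examples with the same k gives a simple, if coarse, measure of which one predicts missing words better.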

GPT uses causal language modeling

GPT (Generative Pre-trained Transformer) models are not trained with Fill-Mask. They use a related objective called causal (or autoregressive) language modeling: predicting the next word in a sequence given the previous words.

The Fill-Mask technique used in BERT, RoBERTa, and ELECTRA is also known as masked language modeling. It hides words anywhere in the text and lets the model use the context on both sides of the gap.

In GPT, the model is trained to predict the next word in a given sequence, effectively “filling in the mask” for the next word based only on the context provided by the words that came before it.

This next-word prediction approach is essential in GPT training because it can:

- Improve language comprehension: By predicting the next word based on context, GPT models can learn the complexities of language, such as semantics, grammar, and contextual relationships between words.

- Text generation abilities: GPT’s training on predicting the next word in a sequence contributes to its powerful text generation abilities. GPT can generate coherent and contextually appropriate text, making it suitable for various applications.

- Transfer learning capabilities: GPT’s pre-training phase, in which it learns to predict the next word, allows the model to acquire general language understanding. The pre-trained model can then be fine-tuned for specific downstream tasks, enhancing its performance across various NLP applications.

In summary, GPT-based models are trained with next-word prediction rather than Fill-Mask, but both objectives teach a model to predict words from context. This improves the models’ language understanding, text generation, and transfer learning abilities, making them highly effective NLP tools.
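To make the difference in available context concrete, the sketch below lists which tokens a model may look at when predicting one position: a BERT-style Fill-Mask model sees both sides of the gap, while a GPT-style model sees only the words to the left. The function name `visible_context` and the whitespace tokenization are assumptions made for illustration.

```python
def visible_context(tokens, position, mode):
    """Return the tokens a model may use to predict tokens[position].

    mode="masked": bidirectional context, as in BERT-style Fill-Mask.
    mode="causal": left-to-right context, as in GPT-style prediction.
    """
    if mode == "masked":
        return tokens[:position] + ["[MASK]"] + tokens[position + 1:]
    if mode == "causal":
        return tokens[:position]
    raise ValueError(f"unknown mode: {mode}")


tokens = "The quick brown fox jumps over the lazy dog".split()
print(visible_context(tokens, 4, "masked"))
print(visible_context(tokens, 4, "causal"))
```

For the word “jumps”, the masked view keeps “over the lazy dog” as evidence, while the causal view stops at “fox”.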

Prompt examples with Fill-Mask:

Just enter the prompt in ChatGPT.

Example 1: Predicting the next word in a sentence

Prompt: “The road was slippery due to the heavy _.”

ChatGPT might predict the next word as “rain.”

Or

The road was slippery due to the heavy [rain]. Probability: rain - 0.79

Please note that this probability is a rough estimate and not an actual value from the model.

Example 2: Filling in the blanks in a paragraph

Prompt: “He was __ in the morning but very __ in the evening.”

Suitable predictions could be “tired” and “energetic.”

Example 3: Identifying the correct word in a contextual statement

Prompt: “The quick brown fox ___ over the lazy dog.”

ChatGPT might generate the correct word, “jumps,” to complete the sentence.

Example 4: Text completion based on the semantic meaning of previous sentences

Prompt: “Jessica bought a new dress for the party. She wore it and felt extremely _.”

Predicted words could be “beautiful” or “confident” in this context.

Example 5: Generating realistic and linguistically accurate sentences

Prompt: “To bake a cake, you’ll need to _”

Fill-Mask could suggest options like “mix the ingredients” or “follow the recipe.”
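The prompts above mark blanks with underscores, which works for ChatGPT. Dedicated fill-mask models instead expect a special mask token, for example `[MASK]` for BERT or `<mask>` for RoBERTa. A small helper, here given the hypothetical name `to_mask_format`, could convert between the two styles:

```python
import re


def to_mask_format(prompt, mask_token="[MASK]"):
    """Replace each run of underscores in prompt with mask_token."""
    return re.sub(r"_+", mask_token, prompt)


print(to_mask_format("He was __ in the morning but very __ in the evening."))
# He was [MASK] in the morning but very [MASK] in the evening.
```

Note that each mask token stands for a single token in such models, so multi-word answers like “mix the ingredients” are more typical of ChatGPT than of a fill-mask model.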

Example with probabilities

You can also ask for probabilities in the prompt:

fill, and show probabilities: “To bake a cake, you’ll need to _”

Result:

“To bake a cake, you’ll need to [gather ingredients]” Probabilities: gather ingredients - 0.28

“To bake a cake, you’ll need to [follow a recipe]” Probabilities: follow a recipe - 0.26

“To bake a cake, you’ll need to [preheat the oven]” Probabilities: preheat the oven - 0.22

“To bake a cake, you’ll need to [measure and mix the ingredients]” Probabilities: measure and mix the ingredients - 0.24

Please note that these probabilities are rough estimates and not actual values from the model.
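For comparison, a model that does expose its scores typically turns them into probabilities with a softmax, which is why real probabilities over a candidate set sum to 1. The sketch below uses made-up scores purely for illustration. They are not outputs of ChatGPT or any other model.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {name: math.exp(s - peak) for name, s in scores.items()}
    total = sum(exps.values())
    return {name: e / total for name, e in exps.items()}


# Hypothetical scores, chosen only for illustration.
scores = {
    "gather ingredients": 2.0,
    "follow a recipe": 1.9,
    "measure and mix the ingredients": 1.8,
    "preheat the oven": 1.7,
}
probs = softmax(scores)
for phrase, p in probs.items():
    print(f"{phrase}: {p:.2f}")
```

The ordering of the probabilities always matches the ordering of the scores. Only the scale changes.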

Conclusions

As NLP technology matures, tools like ChatGPT and techniques like Fill-Mask become increasingly important in applications such as text analysis, prediction, and completion.

Understanding their capabilities and potential impact can guide you in implementing them effectively, improving the overall performance of your projects and systems.