Explore the capabilities of Advanced LLMs, cutting-edge AI language models, for advanced natural language processing tasks and complex problem-solving.


GPT-4 is a strong choice if you are looking for innovative solutions built on cutting-edge AI technology. This advanced language model excels at comprehending and producing human-like language, two capabilities at the core of natural language processing.

GPT-4’s capabilities can help you tackle problems in various applications, such as translating languages, developing conversational agents, condensing lengthy text, and creating original content.

You can experience more seamless and intuitive interactions with AI by leveraging GPT-4’s power, allowing you to discover inventive ways to solve problems and boost efficiency.

Natural Language Processing

ChatGPT is based on Natural Language Processing (NLP). This field explores how AI, linguistics, and computer science can be combined to enable computers to understand, interpret, and generate human language. As an AI language model, ChatGPT leverages NLP techniques to perform various tasks like engaging in conversation, providing sentiment analysis, and assisting with translation, among other applications.

What is ChatGPT? An Example of an Advanced LLM

ChatGPT is an advanced LLM specifically designed to generate contextually relevant responses in a conversational format. It is an extension of OpenAI’s GPT series that emphasizes effectiveness in generating high-quality text and supporting intricate conversations and applications.

ChatGPT, like other advanced LLMs, is based on the Transformer architecture, a neural network structure built around attention. After pretraining on large amounts of text, it is fine-tuned using Reinforcement Learning from Human Feedback (RLHF) to improve performance and generate human-like responses in various tasks.

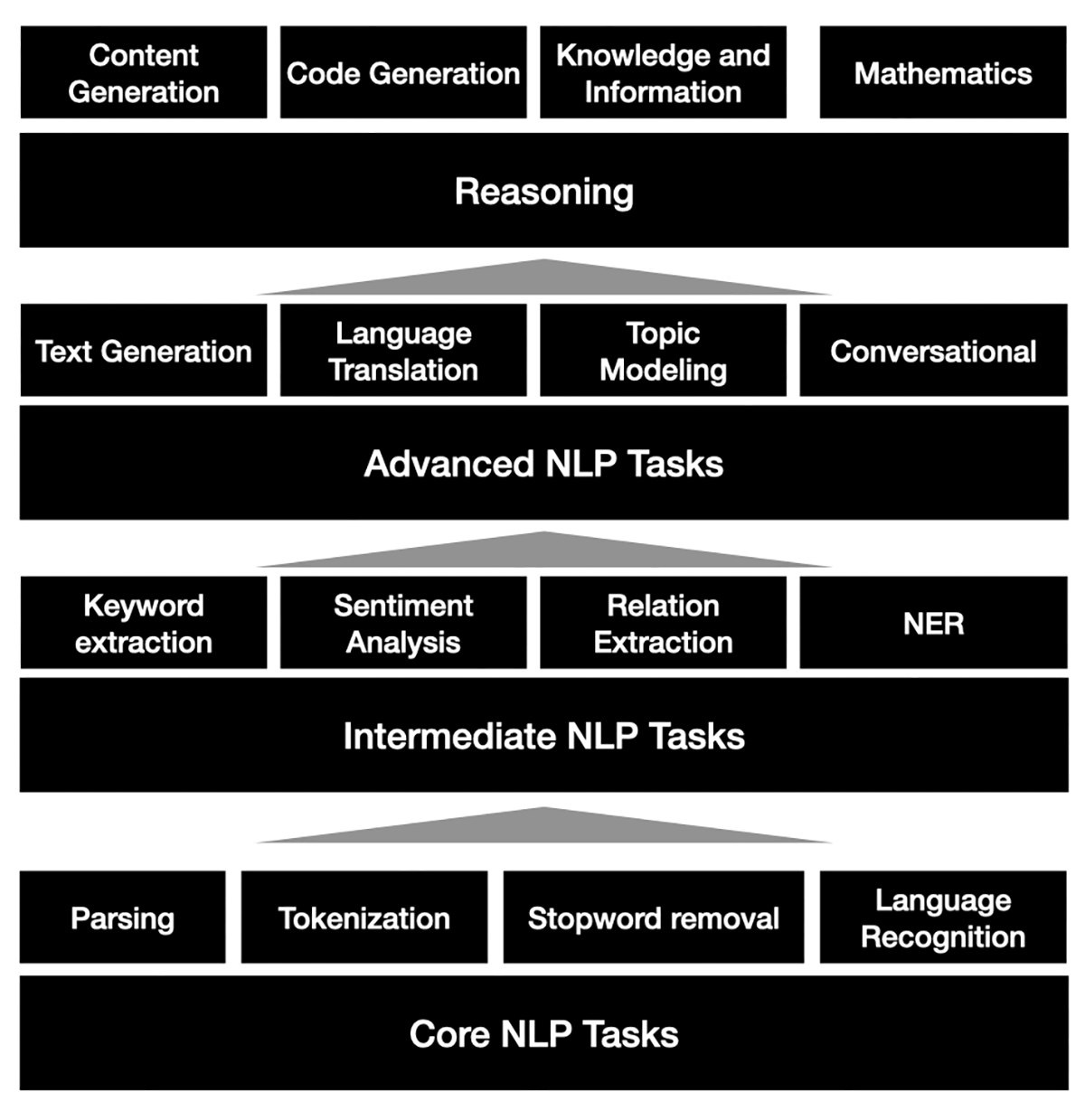

Layers of Advanced LLMs

A large-scale AI language model such as GPT-4 can understand, generate, and process text resembling human language based on the input it receives. Its capabilities can be organized into four layers:

Layer 1: Core NLP Tasks

The foundation of the system’s capabilities involves essential natural language processing (NLP) tasks such as tokenization, part-of-speech tagging, named entity recognition, and parsing. By deconstructing input text into smaller units, identifying essential entities, and comprehending grammatical structure, the system can grasp the meaning and context of the given text.

Layer 2: Intermediate NLP Tasks

Intermediate NLP tasks expand on the fundamental concepts and techniques of core NLP tasks and require a more in-depth understanding of language and context. These tasks frequently serve as stepping stones to more advanced NLP techniques.

Layer 3: Advanced NLP Tasks

Building on the core and intermediate NLP tasks, the system can perform more sophisticated tasks such as sentiment analysis, summarization, text classification, and machine translation. These tasks involve a higher-level understanding and manipulation of the text, which allows the system to extract insights, identify patterns, and generate responses.

Layer 4: Reasoning and Complex Problem Solving

At the highest level, the system’s capabilities extend to tasks that require a deeper understanding of the text, inference, and problem-solving. This includes question-answering, text-based reasoning, and open-domain dialogue generation. The system synthesizes information from various sources and uses context and logic to generate relevant and coherent responses in these cases.

Relationship between Layers

The relationship between the system’s capabilities and advanced tasks is hierarchical. Core NLP tasks provide a basic understanding of language, which supports advanced NLP tasks that require more sophisticated processing. Finally, complex problem-solving and reasoning tasks rely on both core and advanced NLP tasks to generate meaningful and contextually appropriate responses.

In summary, the system’s capabilities are built on a multi-layered foundation of core and advanced NLP tasks, allowing it to process and generate text resembling human language. These capabilities enable the system to tackle complex tasks requiring inference, problem-solving, and reasoning based on the input it receives.

What is a Task?

An NLP task can be compared to a product feature. Just as product features are designed to address specific user needs and provide functionality, NLP tasks within a language model like GPT-4 are tailored to handle various natural language processing applications.

These tasks aim to solve problems or perform specific functions using the model’s understanding of human language, analogous to features that enhance the product’s overall value and utility.

Core NLP Tasks of Advanced LLMs

Core NLP tasks of GPT-4 and LLMs like Mistral involve essential natural language processing techniques that enable the models to understand and generate human-like text. These tasks include:

- Tokenization: breaking text down into individual words, phrases, symbols, or other meaningful elements called tokens. This fundamental task converts unstructured text into a structured form that is easier to analyze and process (tokenization: text).

- Language identification/recognition: identifying the language a text is written in (identify language: text).

- Sentence segmentation: dividing a text into individual sentences (sentence segmentation for: text).

- Stopword removal: removing common words (such as ‘the’, ‘and’, ‘is’, and so on) that carry little meaning on their own and can be excluded from further text analysis (Stopword removal for: text).

- Stemming and lemmatization: reducing words to their base or root form, which helps group similar words together and reduces overall vocabulary size (Stemming and lemmatization for: text).

- Dependency parsing: examining a sentence’s grammatical structure to determine the relationships between words and phrases (Dependency parsing for: text ; returns a tree).

- Constituency parsing: determining a sentence’s syntactic structure by identifying its constituent phrases and their hierarchical organization (do constituency parsing for: text).

- Coreference resolution: identifying which expressions in a text refer to the same entity, for example linking a pronoun to the name it stands for. This is essential for natural language understanding because it clarifies the relationships between entities and concepts in a text (coreference resolution for: text).

By successfully performing these foundational tasks, the models can comprehend the meaning and context of input text. This understanding is the basis for more advanced NLP tasks and reasoning capabilities.
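
To make a few of these tasks concrete, here is a minimal rule-based sketch of sentence segmentation, tokenization, and stopword removal. It only illustrates what each task produces. It is not how GPT-4 or Mistral process text internally (such models typically work on learned subword tokens), and the function names and the tiny stopword list are assumptions made for this example.

```python
import re

# Tiny illustrative stopword list (an assumption, not a standard list).
STOPWORDS = {"the", "and", "is", "a", "an", "of", "to", "in", "on", "it"}


def split_sentences(text):
    """Naive sentence segmentation: break after ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def tokenize(sentence):
    """Split into words (keeping internal apostrophes) and single punctuation marks."""
    return re.findall(r"\w+(?:'\w+)*|[^\w\s]", sentence)


def remove_stopwords(tokens):
    """Drop tokens whose lowercase form is in the stopword list."""
    return [t for t in tokens if t.lower() not in STOPWORDS]


if __name__ == "__main__":
    for sentence in split_sentences("The cat sat on the mat. It is happy!"):
        tokens = tokenize(sentence)
        print(tokens, "->", remove_stopwords(tokens))
```

Simple rules like these break on abbreviations such as "e.g." or on languages without whitespace between words, which is one reason learned models are used in practice.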

Intermediate NLP Tasks of ChatGPT and GPT-4

Intermediate NLP tasks often involve machine learning techniques and build upon core NLP tasks to achieve higher-level language understanding and processing.

- Part-of-speech (POS) tagging : assigning a grammatical category or “part of speech” (such as noun, verb, or adjective) to each word or token in a given text.

- Spam Detection : deciding whether a text is spam (is this text spam: text). Although this is an intermediate NLP task, ChatGPT might not be the best choice for it, because spam strategies evolve over time and GPT-4 has a training cutoff date.

- Keyword extraction : automatically identifying and extracting the most relevant and essential words or phrases from a text document.

- Sentiment Analysis : This refers to the ability of the LLM to analyze the emotional tone of a piece of text, such as whether the text is positive, negative, or neutral.

- Named Entity Recognition : This refers to the ability of the LLM to recognize and identify named entities, such as people, places, organizations, and dates, in a given text.

- Aspect-based sentiment analysis : goes beyond basic sentiment analysis by determining sentiment towards specific aspects in a text, requiring a higher level of language understanding than core NLP tasks.

- Relation Extraction : is identifying and classifying relationships between entities in a text, such as “person A works at organization B” or “location X is in country Y.”

- Text Classification : This refers to the ability of the LLM to classify a given text into predefined categories, such as topic, genre, or sentiment.
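
The relation extraction item above can be pictured as turning sentences into (subject, relation, object) triples. The sketch below does this with two hand-written patterns that mirror the “works at” and “is in” examples. The `Relation` type, the relation labels, and the patterns are hypothetical; an LLM or a trained extractor would not rely on fixed regular expressions like these.

```python
import re
from typing import NamedTuple


class Relation(NamedTuple):
    subject: str
    relation: str
    obj: str


# One or more capitalized words, e.g. "Ada Lovelace" (a crude stand-in for NER).
NAME = r"[A-Z]\w+(?: [A-Z]\w+)*"

# Hypothetical surface patterns paired with relation labels.
PATTERNS = [
    (re.compile(rf"(?P<s>{NAME}) works at (?P<o>{NAME})"), "works_at"),
    (re.compile(rf"(?P<s>{NAME}) is in (?P<o>{NAME})"), "located_in"),
]


def extract_relations(text):
    """Return every pattern match in the text as a Relation triple."""
    relations = []
    for pattern, label in PATTERNS:
        for match in pattern.finditer(text):
            relations.append(Relation(match.group("s"), label, match.group("o")))
    return relations


if __name__ == "__main__":
    for rel in extract_relations("Ada Lovelace works at Acme Corp. Paris is in France."):
        print(rel)
```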

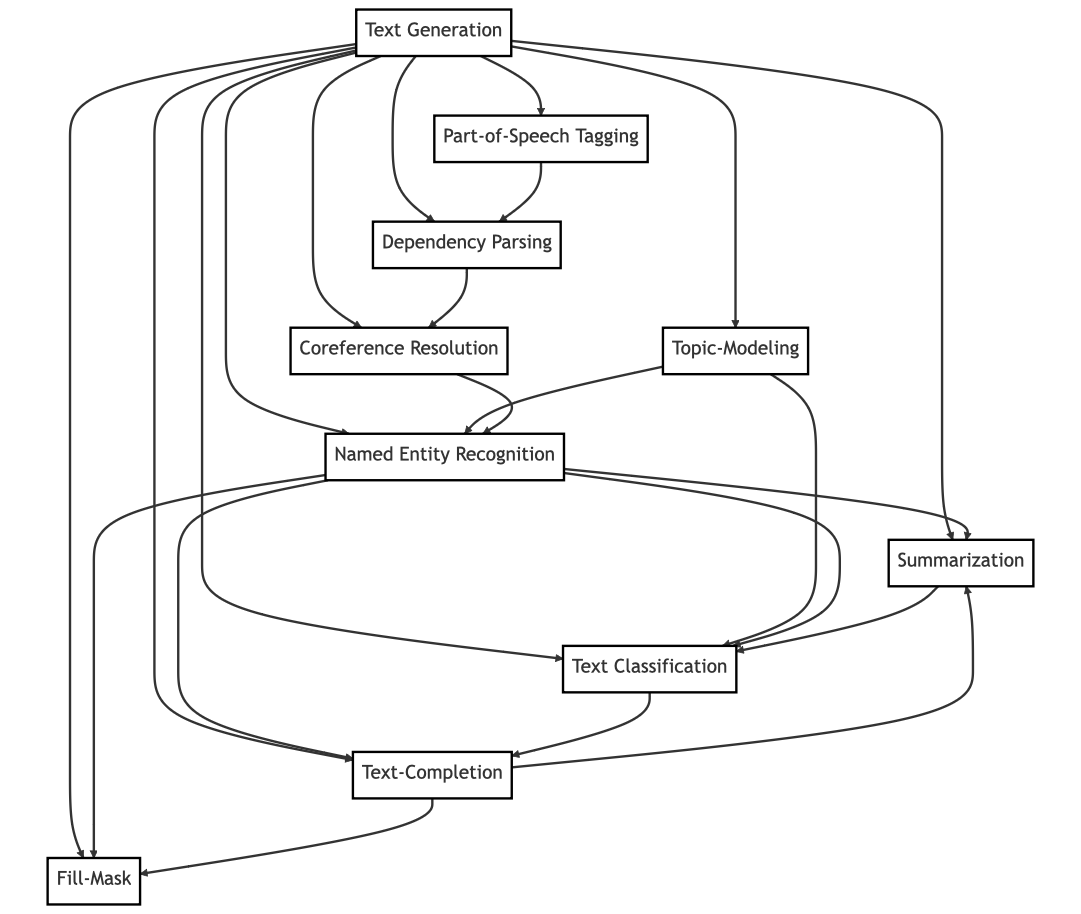

Advanced NLP Tasks of ChatGPT and GPT-4

LLMs are AI systems capable of various language tasks. They enable tasks such as generating text, translating, summarizing, analyzing emotion, answering questions, identifying named entities, classifying text, and predicting the next word. Typical NLP Tasks of ChatGPT and GPT-4 are:

- Question Answering : This refers to the ability of the LLM to answer questions posed to it in natural language.

- Language Translation : This refers to the ability of the LLM to translate text from one language to another while preserving the meaning of the text.

- Conversational : involves understanding and generating appropriate responses in a dialogue between humans and AI systems.

- Summarization and Compression : This refers to the ability of the LLM to summarize or compress a longer text into a shorter, more concise version while preserving the text’s main points.

- Paraphrasing : involves expressing a given text’s meaning in different words or phrasing while retaining its original intent.

- Text Generation : This refers to the ability of the LLM to produce coherent and meaningful text based on a given prompt or context.

- Text Completion : involves continuing a given text by predicting the words or phrases most likely to come next in the context.

- Fill-Mask : involves predicting and completing missing words or phrases within a given text based on context.

- Grammar and spell-checking : identifying and correcting errors in grammar and spelling in a text.

- Topic modeling : finding topics or themes in a collection of documents or text data.

- Semantic Role Labeling (SRL) : identifying the semantic roles or arguments within a sentence and associating them with the appropriate predicate, usually a verb.

There are many different types of language models, each with its strengths and weaknesses, and some are designed to perform specific tasks better than others. For example, models like BERT or RoBERTa are trained to predict masked words, which makes them a natural fit for fill-in-the-blank tasks. In contrast, others like GPT-3 are more general-purpose models that can perform various language-related tasks.

Additionally, some models are better suited for specific data or languages. For example, a model trained on English language data may not perform as well when used to process text in a different language. Similarly, some models may be better suited for processing text from specific domains or fields, such as medical or legal texts.
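
The difference between text completion and fill-mask can be illustrated with a toy word-pair counter. This is only a sketch under strong simplifying assumptions: the corpus is a dozen words, and neither BERT nor GPT works by counting bigrams. It shows only that completion uses the left context, while fill-mask can also use the words to the right of the gap.

```python
from collections import Counter, defaultdict

# Toy corpus (an assumption for illustration only).
CORPUS = "the cat sat on the mat . the cat ate the fish".split()

# FOLLOWERS[w] counts which words appear directly after w.
FOLLOWERS = defaultdict(Counter)
for prev, nxt in zip(CORPUS, CORPUS[1:]):
    FOLLOWERS[prev][nxt] += 1


def complete(left):
    """Text completion: most frequent word seen after `left`."""
    return FOLLOWERS[left].most_common(1)[0][0]


def fill_mask(left, right):
    """Fill-mask: the word that best fits between `left` and `right`."""
    def score(word):
        # Combine evidence from the left neighbour and the right neighbour.
        return FOLLOWERS[left][word] * FOLLOWERS.get(word, Counter())[right]
    return max(FOLLOWERS[left], key=score)


if __name__ == "__main__":
    print(complete("the"))        # left context only
    print(fill_mask("the", "."))  # left and right context
```

With this corpus, `complete("the")` picks "cat" because it follows "the" most often, while `fill_mask("the", ".")` picks "mat" because it is the only candidate that is also followed by a period.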

Reasoning and Complex Problem-Solving Tasks of ChatGPT and GPT-4

Reasoning and complex problem-solving tasks of ChatGPT and GPT-4 involve a higher level of understanding and inference, enabling the models to tackle challenging language-related problems. These tasks include question answering, which requires synthesizing information from various sources; text-based reasoning, where the models draw logical conclusions from the given text; and open-domain dialogue generation, where contextually appropriate and coherent responses are generated in conversations. By building on core and advanced NLP tasks, ChatGPT and GPT-4 can address complex problems, employing inference, problem-solving, and reasoning based on their input. Some examples:

- Content Generation

- Code generation and assistance

- Knowledge and Information

- Recommendations and Suggestions

- Language and Communication

- Mathematics

- Data analysis and data insights

- Personalization and Customization

- Complex Reasoning

- Textual entailment is a task that involves determining whether a given piece of text (called the hypothesis) can be logically inferred or deduced from another part of the text (called the premise).

- Commonsense Reasoning is an NLP task that involves enabling machines to understand and interpret everyday knowledge that humans take for granted.

- Abductive Reasoning is an NLP task that involves inferring the most plausible explanation for a given set of observations or premises.
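
Textual entailment, described in the list above, is often posed to a model as a three-way choice. The following sketch builds such a prompt and parses a free-text reply. The prompt wording, the function names, and the three labels (a common convention in entailment datasets) are assumptions for illustration; no model is called here.

```python
# Common three-way labelling scheme (an assumption, not taken from the article).
LABELS = ("entailment", "contradiction", "neutral")


def build_entailment_prompt(premise, hypothesis):
    """Format a premise/hypothesis pair as a single instruction prompt."""
    return (
        "Decide whether the hypothesis follows from the premise.\n"
        f"Premise: {premise}\n"
        f"Hypothesis: {hypothesis}\n"
        f"Answer with one word: {', '.join(LABELS)}."
    )


def parse_label(reply):
    """Return the known label that appears earliest in the reply, or None."""
    lowered = reply.lower()
    hits = [(lowered.find(label), label) for label in LABELS if label in lowered]
    return min(hits)[1] if hits else None


if __name__ == "__main__":
    print(build_entailment_prompt("A dog is sleeping on the sofa.", "An animal is resting."))
    print(parse_label("Entailment."))
```

Parsing the reply defensively matters because a chat model may wrap the label in a full sentence rather than answer with a single word.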

Reasoning and complex problem-solving NLP tasks of ChatGPT rely heavily on advanced and core NLP tasks and the quality of its knowledge base.

Advanced NLP tasks such as natural language inference, question answering, and dialogue management are essential for ChatGPT to reason and solve complex problems. These tasks involve understanding the context, meaning, and nuances of natural language and require the model to be able to analyze and interpret large amounts of text.

Core NLP tasks such as part-of-speech tagging, named entity recognition, and syntactic parsing are also crucial for ChatGPT to reason and solve problems. These tasks provide the foundation for understanding the structure and meaning of sentences and enable ChatGPT to identify important information and relationships between words.

Moreover, the quality of ChatGPT’s knowledge base is also crucial for its reasoning and complex problem-solving abilities. The knowledge base is built through the model’s training data and can include information about various topics, including facts, concepts, and relationships between them. A high-quality knowledge base allows ChatGPT to make more accurate and informed decisions when solving complex problems.

Multimodal Features

Mistral, GPT-4, LLaMA3, and LLaVA all offer multimodal capabilities, enhancing their utility in diverse applications. GPT-4, developed by OpenAI, is particularly notable for its advanced language processing capabilities, enabling it to understand and generate human-like text across various contexts. Its multimodal features, which include the ability to process and generate text, images, and even code, make it an excellent choice for complex applications that require a deep understanding of language and context. This versatility benefits tasks such as content creation, automated customer service, and even medical diagnostics, where both textual and visual inputs are crucial.

Mistral, LLaMA3, and LLaVA are also strong contenders in the multimodal AI space, each with its own strengths. Mistral focuses on robustness and efficiency, making it suitable for applications where resource constraints are a concern. LLaMA3, developed by Meta AI, offers advanced capabilities in understanding and generating natural language and, in its vision-enabled variants, in interpreting images, positioning it well for AI research and development. LLaVA (Large Language and Vision Assistant) connects a vision encoder to a language model, making it a good fit for image-grounded assistants and visual question answering. These models are still evolving, and ongoing improvements in their training promise more sophisticated and integrated multimodal capabilities in the near future.

Tooling / Functions / Agents

Including functions or tooling within large language models (LLMs) brings significant changes. First, it enables more interactive user experiences, as users can ask the model to perform specific tasks like data retrieval from spreadsheets, report generation, or smart device control.

Second, these functional capabilities can streamline work in various industries through AI agent workflows. For example, in the business sector, LLMs can automate routine tasks such as scheduling meetings, drafting emails, or analyzing data, thereby increasing efficiency. In software development, LLMs with coding functions can assist developers by generating boilerplate code, debugging, and suggesting improvements to existing code.

As LLMs evolve, integrating advanced functions will enhance their utility and contribute to advancements across multiple fields.
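
As a rough sketch of how tooling can work, the application can register ordinary functions and run whichever one the model asks for. The JSON request shape (`name`, `arguments`), the tool names, and the in-memory spreadsheet below are all hypothetical and do not reproduce any vendor's API. The model's request is hard-coded here instead of coming from a real LLM.

```python
import json

# Stand-in for a spreadsheet (hypothetical data).
SHEET = [
    {"month": "Jan", "revenue": 120},
    {"month": "Feb", "revenue": 135},
]


def get_cell(args):
    """Hypothetical tool: read one value from the in-memory sheet."""
    return SHEET[args["row"]][args["column"]]


def draft_email(args):
    """Hypothetical tool: produce a plain-text email header."""
    return f"To: {args['to']}\nSubject: {args['subject']}\n"


# Registry mapping tool names to Python callables.
TOOLS = {"get_cell": get_cell, "draft_email": draft_email}


def dispatch(tool_call_json):
    """Run the tool named in a JSON request and return the outcome as JSON."""
    call = json.loads(tool_call_json)
    name = call.get("name")
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    result = TOOLS[name](call.get("arguments", {}))
    return json.dumps({"result": result})


if __name__ == "__main__":
    # In a real agent loop this string would be produced by the model.
    request = '{"name": "get_cell", "arguments": {"row": 1, "column": "revenue"}}'
    print(dispatch(request))
```

Keeping the registry explicit means the model can only trigger functions the application has chosen to expose, which is a sensible default when tools have side effects.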

Crafting Effective Prompts

It’s important to note that while ChatGPT (GPT-4/GPT-3.5) can perform these tasks, the output quality may vary depending on the prompt, context, and other factors.

Additionally, GPT-4 is not infallible and may occasionally produce incorrect or nonsensical answers. It is crucial to approach the results critically and verify the information provided, especially when dealing with sensitive or complex subjects.

GPT-4, like other generative AI models, is probabilistic: the same prompt can produce different outputs, so you will not always get the results you expect. This variability can also be used as a source of creativity.
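
One common source of this variability is that text generators sample the next token from a probability distribution instead of always taking the most likely one. The sketch below shows temperature-scaled sampling over made-up scores. The tokens, the scores, and the function names are assumptions, and nothing here describes GPT-4's actual internals.

```python
import math
import random


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature=1.0, rng=random):
    """Draw one token at random according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


if __name__ == "__main__":
    tokens = ["blue", "grey", "green"]
    logits = [2.0, 1.0, 0.5]  # made-up scores for "The sky is ..."
    for temperature in (0.2, 1.0, 2.0):
        draws = [sample_token(tokens, logits, temperature) for _ in range(10)]
        print(temperature, draws)
```

At low temperature nearly every draw is the top-scoring token, while higher temperatures spread the draws across alternatives, which is the trade-off between predictability and variety.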

To get the best results from GPT-4, it’s essential to:

- Craft clear and specific prompts: Make sure your question or prompt is well-defined and unambiguous to help guide the model toward the desired response, for example, by using an outline.

- Provide context: Include specific background information in the prompt if your query requires particular information. This can help the model better understand your question and provide a more relevant answer.

- Experiment with different phrasings: If you don’t get the desired response initially, try rephrasing your question or using different keywords to help guide the model.

- Be cautious with sensitive topics: GPT-4 may produce content that is biased, offensive, or otherwise inappropriate, particularly when dealing with sensitive issues. Always approach such responses with a critical mindset and cross-check the information provided.
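
The first two tips above (a specific task with an outline, plus background context) can be captured in a small helper that assembles the prompt from labelled parts. The section labels and the function name are one possible convention chosen for this sketch, not a format that GPT-4 requires.

```python
def build_prompt(task, context="", outline=None):
    """Assemble a prompt from optional context, a task, and an optional outline."""
    parts = []
    if context:
        parts.append(f"Context:\n{context.strip()}")
    parts.append(f"Task:\n{task.strip()}")
    if outline:
        # Number the outline items so the model can follow them in order.
        items = "\n".join(f"{i}. {item}" for i, item in enumerate(outline, 1))
        parts.append(f"Follow this outline:\n{items}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_prompt(
        "Summarize the report for a non-technical manager.",
        context="Q3 sales report for a mid-sized retailer.",
        outline=["Key numbers", "Risks", "Recommended next steps"],
    ))
```

Keeping the parts separate also makes it easy to try a different phrasing of the task while holding the context fixed.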

Remember that GPT-4 is an AI language model, and its primary function is to generate human-like text based on the input it receives. It does not have personal opinions, beliefs, or emotions, and its responses should not be taken as definitive answers or advice.

Conclusions

ChatGPT, an advanced AI language model based on the GPT-4 architecture, offers a wide range of capabilities, from core and advanced NLP tasks to complex problem-solving and reasoning.

By leveraging these capabilities, users can tackle a variety of applications, improve efficiency, and create innovative solutions.

Crafting clear, specific, and contextual prompts is essential to maximize the potential of ChatGPT and ensure accurate and relevant responses.

Users should critically approach the model’s outputs, especially when dealing with sensitive or complex subjects.
