Stochastic parrots, take to the skies!


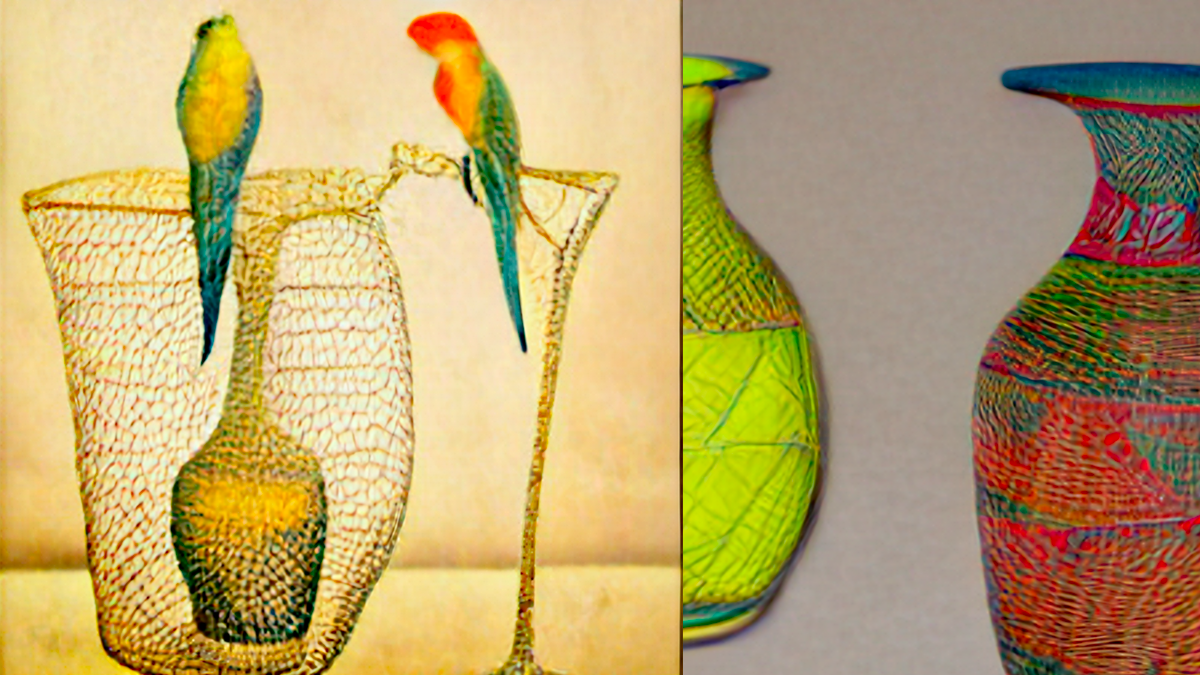

Texts or images generated with NLP/NLU models often give the impression that the result lacks authenticity and subtle nuance. At the same time, DALL-E images created from text input can look nearly perfect, because the pre-trained model behind them can produce fantastic results. An AI created the image above, which was then post-processed by another AI.

Origin of the term “Stochastic Parrots”

Four authors wrote a paper titled “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?” in late 2020; it was presented in March 2021 at the Association for Computing Machinery Conference on Fairness, Accountability, and Transparency.

Their paper criticized the direction of natural language processing research at the time. The criticism focused on bias inherited from the source data (text and images), energy consumption, and incorrect results.

Pre-Trained Models

There are different architectures for these models (BERT and its variants are particularly popular):

- BERT (RoBERTa, DistilBERT, CamemBERT, ALBERT, XLM-RoBERTa, FlauBERT)

- GPT, GPT-2, GPT-3

- Transformer-XL

- XLNet

- XLM

- CTRL

- T5

- BART

- …

Take BERT as an example: the name stands for Bidirectional Encoder Representations from Transformers. The name is a good description, especially the term “Transformers”. Transformers do all the interesting work, such as translating text, generating text, or creating images. A program receives an input, for example a short sentence, and uses the pre-trained model (which is essentially code stored as data) to predict an output, that is, to transform the input.
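To make the idea of “a model is data that a program uses to predict an output” concrete, here is a deliberately tiny sketch. It is not a Transformer and not how BERT works internally; the lookup table, its probabilities, and the names `PRETRAINED_JSON`, `load_model`, and `predict` are all hypothetical and exist only for illustration.

```python
import json

# Hypothetical "pre-trained model": a real one holds millions or billions of
# learned weights. This hand-written table only maps a word to the
# probabilities of the words that may follow it.
PRETRAINED_JSON = """
{
  "the":    {"parrot": 0.7, "model": 0.3},
  "parrot": {"talks": 0.9, "flies": 0.1},
  "talks":  {"a": 1.0},
  "a":      {"lot": 1.0}
}
"""


def load_model(serialized: str) -> dict:
    """The model is plain data; loading it involves no training."""
    return json.loads(serialized)


def predict(model: dict, prompt: str, max_new_words: int = 4) -> str:
    """Greedily append the most probable next word until the model has no entry."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_new_words):
        candidates = model.get(words[-1])
        if not candidates:
            break  # the model knows nothing about this word
        words.append(max(candidates, key=candidates.get))
    return " ".join(words)


lookup_model = load_model(PRETRAINED_JSON)
print(predict(lookup_model, "The"))  # prints: the parrot talks a lot
```

The program never changes the table; it only reads it. A word the table has never seen, such as `predict(lookup_model, "unknown")`, is returned unchanged because there is nothing to predict from.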

Google introduced Transformers in 2017. Before that, language models for NLP tasks were mostly built on recurrent neural networks (RNNs) and convolutional neural networks (CNNs).

Why Pre-Trained Models?

Rather than training new models from scratch, it is common and recommended practice to start from models that have already been pre-trained. This reduces the resources required to get a model ready for use and, as a result, the operational costs of AI-based applications.

Pre-training involves retrieving information from various sources such as social media and analysing word sequences, word embeddings, image classifications, and so forth. The resulting ML models enable computer vision applications, machine translation (from EN to FR, for example), or text processing applications. The collected data is processed by deep learning algorithms, and the result is stored as an artificial intelligence model.

Without a pre-trained model, you will spend a significant amount of time training your own model from scratch; how much depends on your previous experience. This is not only because of the amount of source data, but also because finding a proper CNN architecture requires numerous calculations and experiments.

How many layers are required, what kind of pooling is necessary, and what kind of stack is required? Understanding the data set’s complexity is also important. The computational resources required to generalize your model may exceed your available resources.

As a result, a pre-trained model is invaluable because the difficult work of optimizing the parameters is already done. The model then only needs to be fine-tuned for the task at hand, for example by adjusting the hyperparameters.

Size and Effect

The general assumption is that a larger pre-trained model produces better results, such as higher-resolution images or more nuanced texts. A bigger model also takes longer to train. More training means higher costs and more CO2 emissions.
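A back-of-the-envelope sketch can show how quickly training cost grows with size. It uses a widely quoted rule of thumb for transformer language models: roughly 6 floating-point operations per parameter per training token. The GPT-3 figures (about 175 billion parameters, about 300 billion training tokens) are the publicly reported ones; the small model, the accelerator throughput, and the utilization are assumptions chosen only for illustration, not measurements.

```python
def training_flops(parameters: float, tokens: float) -> float:
    """Approximate training compute: about 6 FLOPs per parameter per token."""
    return 6.0 * parameters * tokens


def accelerator_days(flops: float, peak_flops_per_s: float, utilization: float) -> float:
    """Days a single accelerator would need at the given sustained utilization."""
    seconds = flops / (peak_flops_per_s * utilization)
    return seconds / 86_400


# ASSUMPTIONS (illustrative only): a hypothetical accelerator with a peak of
# 100 TFLOP/s that is kept busy 30% of the time.
ASSUMED_PEAK_FLOPS_PER_S = 100e12
ASSUMED_UTILIZATION = 0.3

# (parameters, training tokens); the small model is hypothetical.
MODELS = {
    "small model (hypothetical)": (125e6, 300e9),
    "GPT-3 scale (reported)": (175e9, 300e9),
}

for name, (parameters, tokens) in MODELS.items():
    flops = training_flops(parameters, tokens)
    days = accelerator_days(flops, ASSUMED_PEAK_FLOPS_PER_S, ASSUMED_UTILIZATION)
    print(f"{name}: {flops:.2e} FLOPs, about {days:,.0f} accelerator-days")
```

Under this approximation, compute grows linearly with the number of parameters when the amount of training data is held fixed, so energy use and emissions grow with it unless the hardware or the energy mix improves.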

This is probably only a short-term concern because CPUs and GPUs are becoming more efficient, and decarbonization is lowering CO2 emissions.

The problem of implicit bias in the sources is an interesting one, and it is where the term “Stochastic Parrot” comes from. While the pre-trained model is being developed, the training does not take into account the workings of the universe, nor does it evaluate philosophical or ethical questions. All it does is process a massive amount of data and deduce a structure from it.

This means that the model, or the process used to train it, does not comprehend the meaning of the data. A pre-trained model is a static, closed world view: it cannot learn anything new and can only repeat what it already knows. If an application uses the model, the application does not extend or change it.
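The parrot metaphor can be sketched with a toy bigram model. This is a drastic simplification and an assumption on my part, not the architecture of any model listed above: “training” only records which word followed which in a text, and generation samples from those records at random. The corpus and the names `train_bigram` and `parrot` are made up for the example.

```python
import random
from collections import defaultdict


def train_bigram(corpus: str) -> dict:
    """Record, for every word, the words that followed it in the training text."""
    words = corpus.lower().split()
    followers = defaultdict(list)
    for current, following in zip(words, words[1:]):
        followers[current].append(following)
    return dict(followers)  # frozen snapshot: nothing is learned after this


def parrot(model: dict, start: str, length: int, rng: random.Random) -> list:
    """Sample a word sequence; repeated followers are chosen proportionally more often."""
    output = [start]
    while len(output) < length and output[-1] in model:
        output.append(rng.choice(model[output[-1]]))
    return output


CORPUS = "the parrot talks a lot but the parrot does not understand a lot"
bigram_model = train_bigram(CORPUS)

# Different seeds give different sentences, but never a word or a word pair
# that was absent from the training text.
for seed in range(3):
    print(" ".join(parrot(bigram_model, "the", 8, random.Random(seed))))
```

Starting from a word the model has never seen, for example `parrot(bigram_model, "truth", 5, random.Random(0))`, yields just `["truth"]`: the model has nothing to say about anything outside its training data.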

Conclusions

The criticism of pre-trained models is partly justified; CO2 emissions, for example, are a problem. However, CO2 emissions are a general problem, relevant to the whole economy, including concrete production, steel making, and even BBQ.

Removing the bias would also mean removing the bias from the original sources. The original sources and data were produced by human authors over time, and as of now, it appears unlikely that an AI will create knowledge unsupervised without input. It is also unlikely that AI will understand truth or ethics soon.

The bias in the data, and as a result in the model, is an issue, but the real issue is not the bias; it is knowing the truth. Knowing the truth has been an unresolved problem even for humans for as long as humans have existed. A truth engine would be an even greater achievement than any pre-trained model.

This means we will probably have to learn to deal with “Stochastic Parrots”. Like a parrot, such a model talks a lot but does not understand much.