Unraveling the Importance of Seed Values for Consistency and Reproducibility with Midjourney


In the rapidly evolving world of AI-generated content, consistency and reproducibility are crucial for creators who want to develop a unique visual language and a cohesive style across their projects.

One important factor in achieving this consistency is using seed values in AI content generation platforms like Midjourney.

Seed values play a significant role in controlling the randomness of the output, enabling users to generate similar results when working on multiple images or designs sharing a common theme or graphic style.

This article examines how seed values support consistency, reproducibility, and collaboration in AI-generated content. It discusses their strengths and limitations for style development and character consistency, and explores approaches and techniques for working around those limitations. By understanding the role of seed values, creators can make better use of AI tools to develop captivating visuals while maintaining a consistent style throughout their projects.

Role of Seed Value for Content Consistency

The seed value is essential in AI-generated content creation for a few reasons:

- Consistency: By providing the same seed value for different text prompts or similar projects, you can achieve results with more consistent visual patterns and elements. This is particularly useful when working on a series of images or designs that need to share a common graphic style or theme.

- Reproducibility: Using a specific seed value allows you to reproduce the same or similar output multiple times. This can help fine-tune your content or make minor adjustments without changing the generated image completely. However, it’s essential to understand that due to the probabilistic nature of AI models, results might not be precisely identical every time, even with the same seed value.

- Collaboration: When working with a team or sharing your work, using a specific seed value can help others replicate your results or create similar content. This is beneficial for maintaining consistency across a collaborative project.

- Exploration: By trying out different seed values, you can explore various visual styles, compositions, and details, which can help you discover new creative possibilities.

It’s important to note that while seed values provide some control over the output, the AI models used by Midjourney (possibly CNNs, GANs, or diffusion models) are inherently probabilistic. Although the same seed value may produce similar or nearly identical images, the outputs are not guaranteed to be the same.

Moreover, seed numbers are not static and should not be relied upon between sessions: as AI models are updated or modified, the same seed value may produce different results. Use seed values for consistency and exploration, but keep their limitations in mind, both the probabilistic nature of the models and the potential for changes between sessions.
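The point about model updates can be made concrete with a toy sketch in Python. This is not Midjourney's implementation. `initial_noise` and `toy_generate` are hypothetical stand-ins, and the "model" is just a list of weights. The seed fixes only the pseudo-random starting noise. When the weights change, as they would after a model update, the same seed therefore yields a different output.

```python
import random


def initial_noise(seed: int, size: int) -> list[float]:
    """Pseudo-random starting noise; the seed fixes this and nothing else."""
    rng = random.Random(seed)  # dedicated generator, unaffected by global random state
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


def toy_generate(seed: int, weights: list[float]) -> list[float]:
    """Hypothetical generator: the output depends on the noise AND the model weights."""
    noise = initial_noise(seed, len(weights))
    return [w * n for w, n in zip(weights, noise)]


MODEL_V1 = [0.5] * 8          # toy "model"
MODEL_V2 = [0.5] * 7 + [0.6]  # the same toy model after a small "update"

if __name__ == "__main__":
    run_1 = toy_generate(100, MODEL_V1)
    run_2 = toy_generate(100, MODEL_V1)
    after_update = toy_generate(100, MODEL_V2)
    print("same seed, same model:   ", run_1 == run_2)  # True
    print("same seed, updated model:", run_1 == after_update)
```

The sketch uses its own `random.Random(seed)` instance rather than the global generator, so no other code in the program can disturb the seeded sequence.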

Range of Seed Value

To set the seed parameter, add the --seed flag followed by a whole number between 0 and 4,294,967,295 (2^32 - 1). That gives 2^32 (4,294,967,296) possible seed values, so each text prompt has more than 4 billion possible starting points.
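Here is a minimal Python sketch of the same rule. It assumes you assemble prompts as plain strings before pasting them into Midjourney. `with_seed` and `random_seed` are hypothetical helpers, not part of any Midjourney API. `with_seed` rejects values outside 0 to 2^32 - 1 and appends the `--seed` parameter.

```python
import random

SEED_MIN = 0
SEED_MAX = 2**32 - 1  # 4,294,967,295


def with_seed(prompt: str, seed: int) -> str:
    """Append a --seed parameter after checking it is a whole number in range (hypothetical helper)."""
    if isinstance(seed, bool) or not isinstance(seed, int):
        raise TypeError("seed must be a whole number")
    if not SEED_MIN <= seed <= SEED_MAX:
        raise ValueError(f"seed must be between {SEED_MIN} and {SEED_MAX}")
    return f"{prompt} --seed {seed}"


def random_seed() -> int:
    """Pick a seed from the full range; write it down so the run can be repeated."""
    return random.randint(SEED_MIN, SEED_MAX)  # randint includes both endpoints


if __name__ == "__main__":
    print(SEED_MAX - SEED_MIN + 1)  # number of possible seeds: 4294967296
    print(with_seed("a bird flies over the beach", 100))
    # a bird flies over the beach --seed 100
```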

Note: In Stable Diffusion, by contrast, the seed parameter is largely deterministic: the same prompt, seed, and settings usually reproduce the same image.

Midjourney Prompt Template

The following example uses Midjourney’s prompt templates (which Midjourney calls permutation prompts) to iterate through different results quickly. This speeds up testing because we don’t have to write out each prompt variant by hand, which also reduces the chance of an error.

cinematic scene - {fast Steadicam shot, extreme close up, following}: a bird flies over the beach --seed {100, 200, 300} --v 5

Midjourney will create nine prompts:

cinematic scene - fast Steadicam shot: a bird flies over the beach --seed 100 --v 5

cinematic scene - fast Steadicam shot: a bird flies over the beach --seed 200 --v 5

cinematic scene - fast Steadicam shot: a bird flies over the beach --seed 300 --v 5

cinematic scene - extreme close up: a bird flies over the beach --seed 100 --v 5

cinematic scene - extreme close up: a bird flies over the beach --seed 200 --v 5

cinematic scene - extreme close up: a bird flies over the beach --seed 300 --v 5

cinematic scene - following: a bird flies over the beach --seed 100 --v 5

cinematic scene - following: a bird flies over the beach --seed 200 --v 5

cinematic scene - following: a bird flies over the beach --seed 300 --v 5
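The expansion follows a simple rule. Each brace group is a list of comma-separated options, and one prompt is produced per combination (the Cartesian product), so 3 × 3 = 9 here. The Python sketch below reproduces that rule, which is handy for checking a template before submitting it. `expand_permutations` is a hypothetical helper, not Midjourney's own parser. It does not handle nested braces or escaped commas.

```python
import itertools
import re

# Matches one brace group such as "{100, 200, 300}" (no nesting).
BRACE_GROUP = re.compile(r"\{([^{}]*)\}")

TEMPLATE = (
    "cinematic scene - {fast Steadicam shot, extreme close up, following}: "
    "a bird flies over the beach --seed {100, 200, 300} --v 5"
)


def expand_permutations(template: str) -> list[str]:
    """Expand every {a, b, c} group into all combinations (hypothetical helper)."""
    # re.split with a capturing group alternates: literal, group, literal, ...
    parts = BRACE_GROUP.split(template)
    literals = parts[0::2]
    options = [[opt.strip() for opt in group.split(",")] for group in parts[1::2]]

    prompts = []
    # product() varies the last group fastest, matching the order listed above.
    for combo in itertools.product(*options):
        pieces = [literals[0]]
        for choice, literal in zip(combo, literals[1:]):
            pieces.append(choice)
            pieces.append(literal)
        prompts.append("".join(pieces))
    return prompts


if __name__ == "__main__":
    for prompt in expand_permutations(TEMPLATE):
        print(prompt)
```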

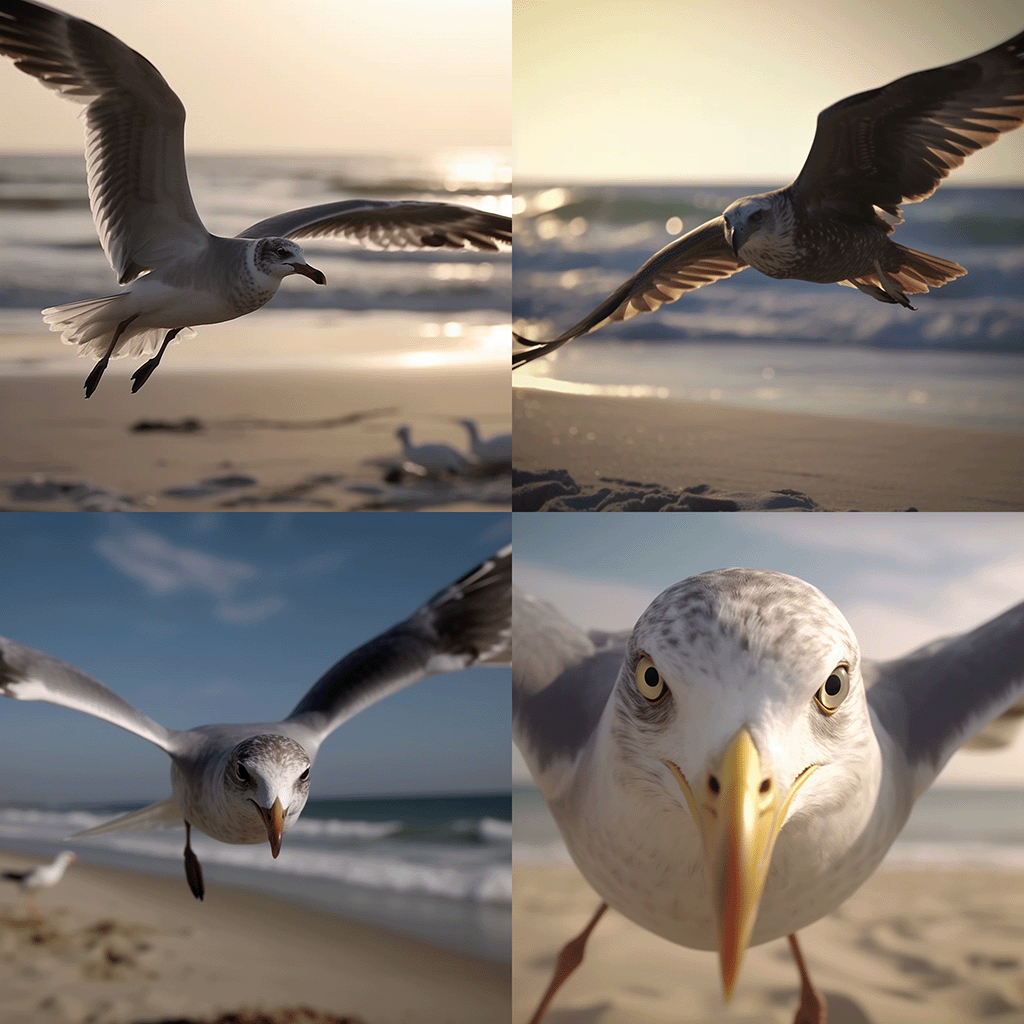

The images with “extreme close up” appear to be the most intriguing:

Seed 100

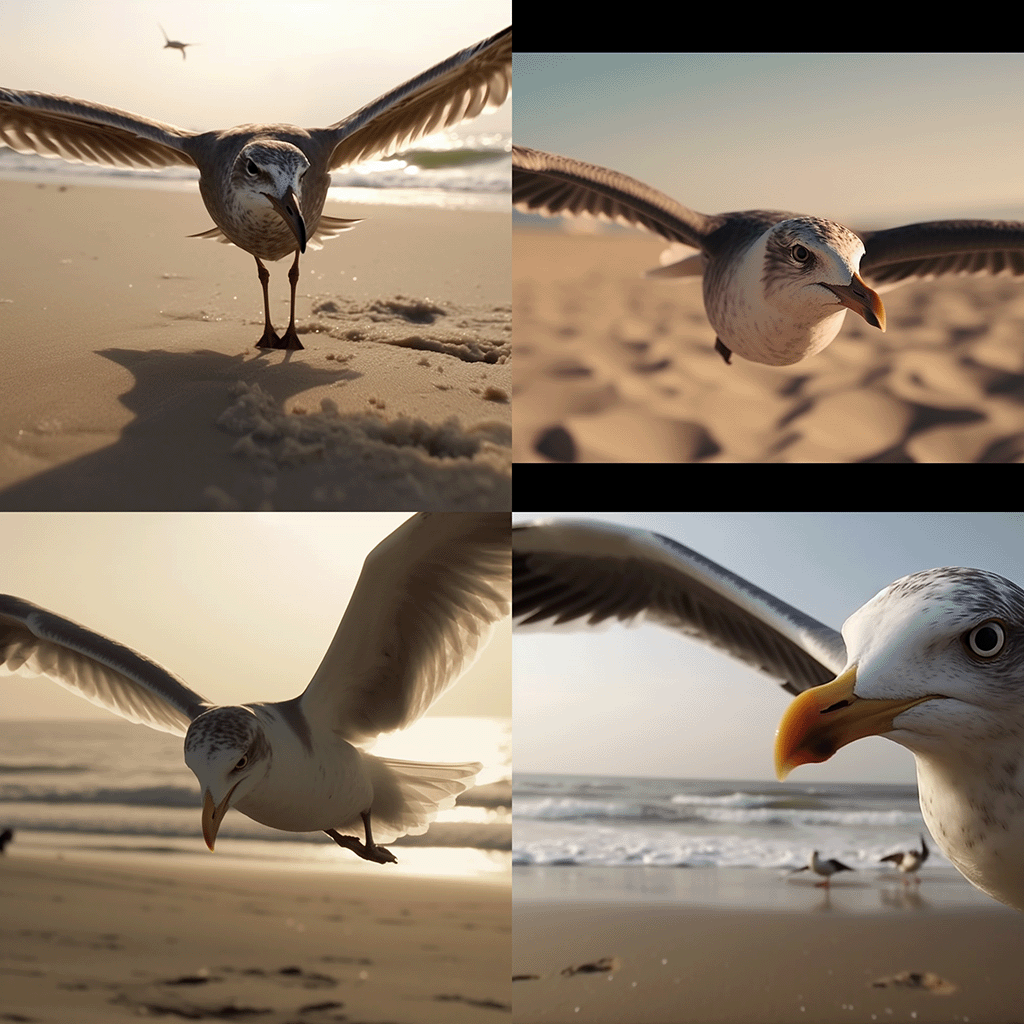

Seed 200

Seed 300

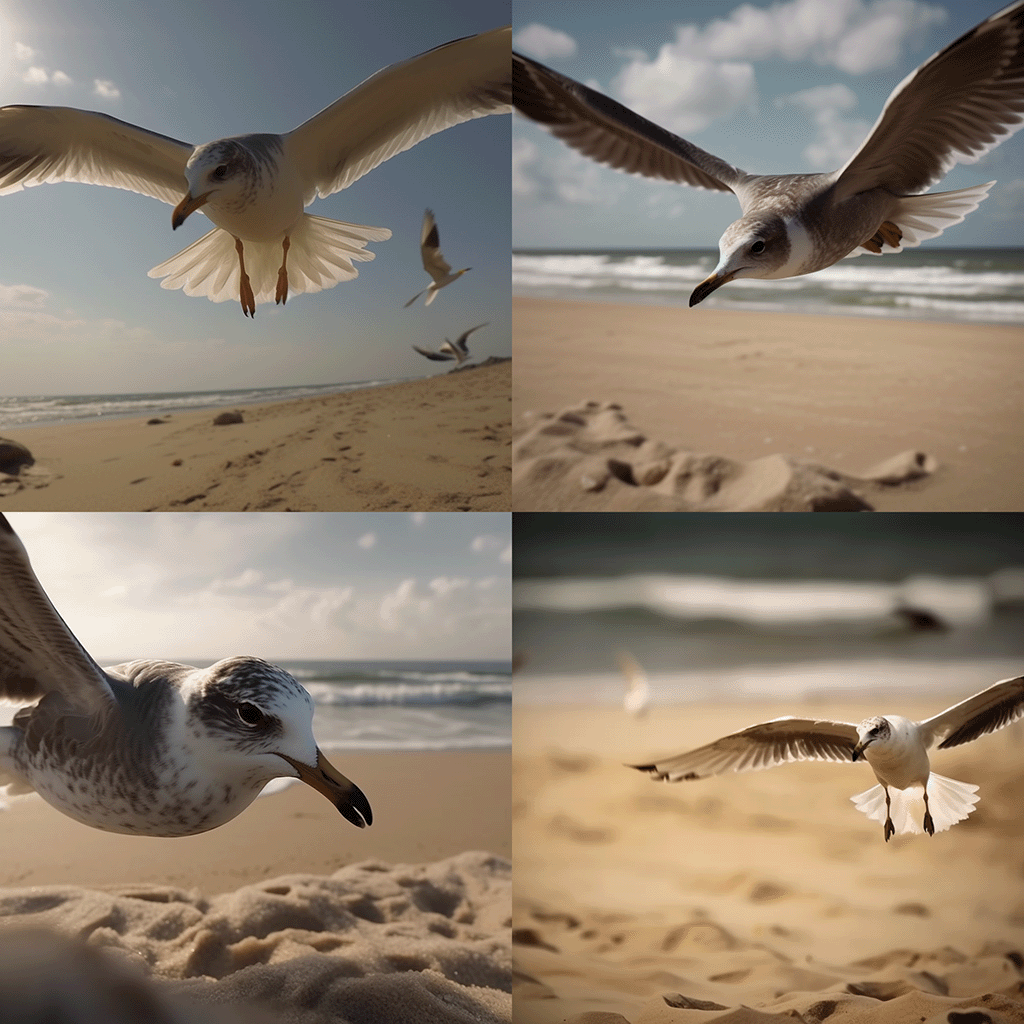

Seed 100 Re-Run

Compare Seed 100 Run 1 with Run 2

Prompt used:

cinematic scene - extreme close up: a bird flies over the beach --seed 100 --v 5

Running this prompt twice produces eight distinct images (two grids of four), even though the prompt and seed are identical. This experiment suggests that, in Midjourney, the seed value does not fully determine the output.

This means that the seed value alone cannot be relied on to develop a character’s style or maintain character consistency.

Overcoming Limitations of Seed for Style Consistency

While the seed parameter is meant to provide some control over the output and promote consistency, Midjourney’s AI models (possibly CNNs, GANs, or diffusion models) are inherently probabilistic. This means that even if you use the same seed value every time, the results may not be identical or even similar.
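Floating-point arithmetic offers one general reason why a fixed seed may still not give bit-identical results. This is not confirmed as the cause in Midjourney, whose internals are not public. Floating-point addition is not associative, and parallel hardware such as GPUs may add the same numbers in a different order from run to run, which changes the rounding. A short Python illustration:

```python
# Floating-point addition is not associative: grouping changes the rounding.
values = [0.1, 0.2, 0.3]

grouped_left = (values[0] + values[1]) + values[2]   # 0.30000000000000004 + 0.3
grouped_right = values[0] + (values[1] + values[2])  # 0.1 + 0.5

print(grouped_left)                   # 0.6000000000000001
print(grouped_right)                  # 0.6
print(grouped_left == grouped_right)  # False
```

A generator performs millions of such operations, so differences this small may accumulate into visible changes. How much they matter in practice depends on the model and the hardware.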

When working with AI-generated content, it’s critical to understand the limitations of seed values, especially regarding style development and character consistency. In such cases, you may need to rely on other methods or tools to keep your project consistent, such as:

- Manual adjustments: Editing or refining AI-generated images by hand to ensure they match the desired style or character consistency. Possible but very time-consuming.

- Style transfer: Applying a consistent visual style across multiple images using AI-based style transfer techniques. Photoshop offers style transfer, but the results are often unpredictable.

- Fine-tuning the AI model: Fine-tuning the AI model on a dataset of images that match your desired style or character consistency to improve the model’s ability to generate images that meet your needs. Midjourney’s AI model cannot be fine-tuned.

- Image-to-Image: Midjourney’s Blend feature allows some style consistency because Midjourney analyses the concept of one image and applies it to another. Stable Diffusion offers image-to-image generation, which is less powerful than Blend. DALL-E 2 only offers image variations, which give little control.

It is critical to experiment with various approaches and techniques to achieve the desired consistency in your project while working with AI-generated content. It also makes sense to use different AI services in combination, like:

- Midjourney for mood, character, and style development

- LeonardoAI for asset development/storyboard

- DreamStudio for mass production (via API)

Navigating Latent Space

AI-generated content platforms like Midjourney and DALL-E 2 rely on massive amounts of training data to make associations and generate images. In the case of “A bird flies over the beach,” the AI models learned from their training data that seagulls are the most common birds found on beaches. Because this association is so likely, they generate an image of a seagull by default.

When you force an AI to imagine something unlikely or outside its usual associations, the results can be unstable. Concepts and associations that are poorly represented in the model’s latent space are hard for it to generate accurately or consistently.

To overcome this limitation, try using more specific text prompts, or negative prompts (in Midjourney, the --no parameter) to exclude unwanted elements from the generated images. However, because AI models are probabilistic, the generated results may still be unpredictable, and achieving stable images for unlikely concepts may remain challenging.
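Midjourney’s `--no` parameter works as its negative prompt and takes a comma-separated list of items to leave out. The sketch below assumes you keep prompt components in code and assemble them before submission. `build_prompt` is a hypothetical helper, and the excluded terms are illustrative only.

```python
from typing import Optional


def build_prompt(subject: str,
                 exclude: Optional[list[str]] = None,
                 seed: Optional[int] = None,
                 version: Optional[str] = None) -> str:
    """Assemble a Midjourney-style prompt string (hypothetical helper)."""
    parts = [subject]
    if exclude:
        # --no takes a comma-separated list of things to leave out of the image
        parts.append("--no " + ", ".join(exclude))
    if seed is not None:
        parts.append(f"--seed {seed}")
    if version is not None:
        parts.append(f"--v {version}")
    return " ".join(parts)


if __name__ == "__main__":
    print(build_prompt("a bird flies over the beach",
                       exclude=["seagulls"], seed=100, version="5"))
    # a bird flies over the beach --no seagulls --seed 100 --v 5
```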

Conclusions

In summary, using seed values in AI content-generation platforms such as Midjourney gives creators some control over output randomness, which is critical for achieving content consistency, reproducibility, and collaboration. However, it is essential to understand the limitations of seed values, especially given the probabilistic nature of AI models such as CNNs, GANs, or diffusion models.

Creators can use a variety of approaches and techniques to address style consistency issues, such as:

- Manual adjustments: Although time-consuming, manually editing or refining AI-generated images can ensure they match the desired style or character consistency.

- Style transfer: Using AI-based style transfer techniques can aid in applying a consistent visual style across multiple images. The outcomes, however, may be unpredictable.

- Fine-tuning the AI model: Fine-tuning the model on a dataset of images with the desired style or character consistency can improve its ability to generate images that meet specific style requirements. Note that Midjourney’s AI model cannot be fine-tuned.

- Image-to-Image: Using image-to-image features, such as Midjourney’s Blend or Stable Diffusion’s image-to-image mode, to maintain some style consistency.

- Combining services: Using different AI services in tandem, such as Midjourney for mood, character, and style development, LeonardoAI for asset development and storyboarding, and DreamStudio for mass production via API.

By experimenting with these approaches and understanding the strengths and limitations of seed values, creators can better leverage AI content-creation tools to develop captivating visuals while maintaining a consistent style throughout their projects.