Exploring the Immensity of Latent Space in Stable Diffusion and Other AI Art Tools?

- 6 minutes read - 1278 words

Table of Contents

Trying to estimate the number of all potential outputs of stable diffusion. It becomes clear why having clear goals while navigating the latent space is essential. Evaluating how long it would take to create any possible output.

Estimating the number of potential outputs of sound diffusion is essential because it provides insight into the diversity and richness of the model’s generative capabilities. By understanding the size of the search space, one can get a sense of the potential creativity and flexibility of the model.

Why the size is interesting

The size of latent space is a measure of the amount of information that can be encoded in a given system. Latent space size isessentialt because it determines how much information can be stored in a system and retrieved later. Measuring latent space size can help determine its capacity.

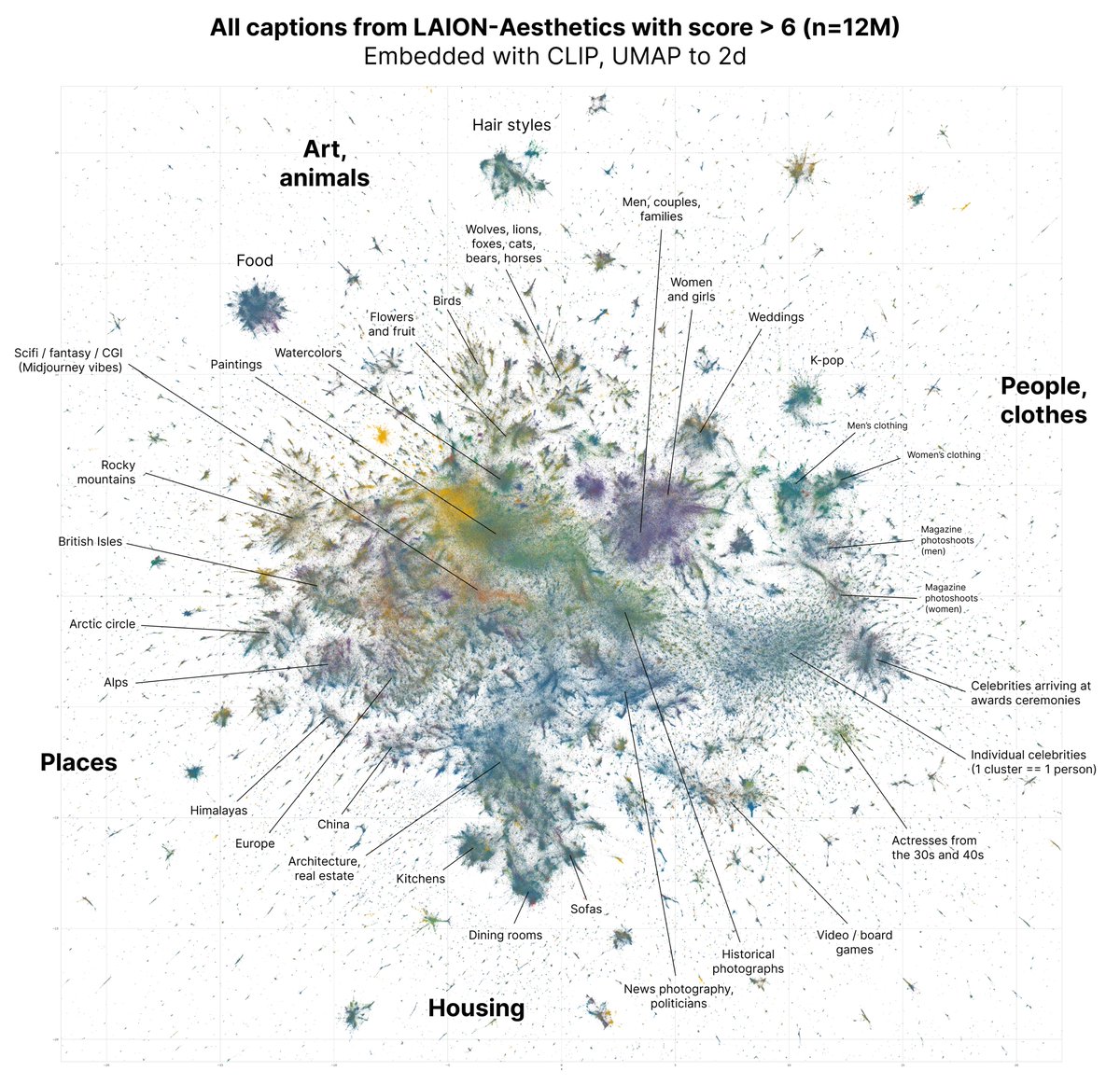

Usingsounde diffusion or similar image synthesis methods like Midjourney, you could get the impression that you can create infinite images. However, the input for training the weights and the text encoder are finite. As long as no emergent behavior can be observed (which appears very unlikely so far),

Latent space size also influences the probability of recreating existing output, limiting the likelihood of a collision with copyright issues. Finally, measuring latent space size for stable diffusion and similar concepts can help assess its full potential.

How can the size of latent space be measured?

Usually, the latent space is described by its dimensionality, not by the number of possible outputs. In the case of content synthesis the number of potential outputs is interesting to understand the potential of, stable diffusion.

The size of latent space is related to the complexity of the system and its ability to generate new patterns. Systems with more complex structures tend to have larger latent spaces, while systems that are less complex tend to have smaller latent spaces. In other words the more input the more output.

Measuring the size of latent space can help users understand how information is encoded in an AI model, and also help to understand how much data needs to be collected to create more complex models. Higher complexity also means more data and more GPU training time, this is telling you something about how fast future models will evolve.

Input size of stable diffusion

The size of latent space is determined by the number of its input to create the models. The more input variables used, the larger the latent space will be. Different combinations of input variables can result in different sizes of latent space.

The size of latent space has an impact on the accuracy and quantity of the predictions made by the model. However, models wsizeable sizeablelarge latent space may be more prone to overfitting than those with a smaller latent space.

Source: https://twitter.com/clured/status/1565399157606580224

Calculation an estimated size

Keeping in mind that this is a tought experiment, to illustrate the size of the stable diffusion model (also called weights or checkpoint ).

The weighted model of stable diffusion contains an abstract representation of all its image input, for the training 2.3 billion images were used. The Image Input I = 2.3 Billion images, and SD can combine any image with any image which means I! combinations:

I! = 2,300,000,000!

Ignoring languages, there are about 1 million - logical - words W (using English as a reference) W!

W! = 1,000,000!

Assuming that styles, artists, and camera positions are special words, you can assume that there are at least 3000 different modifiers M,

M! = 3000!

There is also the seed (Smax) that is unsigned long for Stable Diffusion, 4,294,967,295.

Smax = 4,294,967,295

The inference is combining the abstract „knowledge“ it got from the training, with the prompt input (text and modifier). The formula to get an estimate for the potential volume (Vls) of the Latent Space for Stable Diffusion (and similar AI text-to-image tools) is:

Vls = I! x W! x M! x Smax

Vls = 2,300,000,000! x 1,000,000! x 3,000! x 4,294,967,295 = 10^(10^10.31257235021375)

Collisions

There are about there are approximately 14.3 trillion photos in existence as of 2024, the canon of existing images (C). The number of images taken is much higher (trillions) but they are not shared, they can not be reproduced. They are probably not relevant for a copyright discussion.

Probability (P) to create an image that is almost identical to an existing image:

P = C / Vls = C / ( I! x W! x M! x Smax)

P = 750,000,000,000 / (2,300,000,000! x 1,000,000! x 3,000! x 4,294,967,295) = 10^(-10^10.31257234996265)

The probability of „colliding “ with an existing image is about a 20th billionth. The probability to win the lottery is about (https://en.wikipedia.org/wiki/Lottery_mathematics ) 7.1511238420185162619416617037867203058163808791534442386827… × 10^-8 (period 10626), 1 in 13,983,816.

That means winning the jackpot of a lottery is much higher than colliding with an existing image!

Size of the result space

The possible result space is for an example a 2k image with a color depth of 32bit, 2Kspace.

2Kspace = (2,073,600 x 4,294,967,295)!

and

2Kspace > Vls = True

Which means that even if the latent space is huge, it cannot create any possible image combination. Assuming an inference takes 20 seconds, it would take - a very long time - like trillions of trillions of years to create any possible image.

(2,300,000,000! x 1,000,000! x 3,000! x 4,294,967,295) 20 / 60 / 60 / 24 / 365) = 10^(10^10.31257235008270)

Conclusions

Even if it is only a thought experiment, it shows that for example you cannot create any possible image, and that the potential result space (not the useful result) space is incredible vast. Emergent behavior of the stable diffusion model can be ruled out or should be ruled out, because the training process and the architecture of the huggingface pipeline show no indication that some emergent magic is happening.

Interestingly stable diffusion cannot create any possible image even for a small 2k image at 32 bit. Because of the vastness of the latent space of stable diffusion it is important to have a specific goal in mind using it.

It is very difficult to prove the size of latent space by brute force, it would mean to create any possible input (prompts and parameters) and to prove somehow that the input is really complete, or what complete means. It would also consume a lot of computer power and time, a quantum computer might be able to create all possible inputs and outputs at once (at least in theory).

The completeness of all possible input would imply to know everything, which we do not. According to some philosophers it is impossible to know everything. In other words it is impossible to prove the real size of the latent space of an AI model.