Gödel's Theorems and the Inherent Challenges in AI Alignment


The rapidly evolving field of artificial intelligence (AI) has brought many opportunities and challenges. As AI systems become increasingly integrated into various aspects of our lives, addressing the philosophical and technical complexities surrounding AI alignment is crucial.

This post delves into the intricacies of aligning AI systems with human values, preferences, and social dynamics, as well as the implications of Gödel’s incompleteness theorems on our understanding of AI and the world around us.

This text is based on “Interview with an AI about philosophy (GPT-4)”.

The Inherent Limitations Imposed by Gödel’s Incompleteness Theorems

Kurt Gödel’s incompleteness theorems reveal fundamental limitations of formal systems. The first theorem shows that any consistent, effectively axiomatized system powerful enough to express basic arithmetic contains statements that can be neither proven nor disproven within that system. The second shows that such a system cannot prove its own consistency. Gödel’s insights are often taken to have far-reaching implications, extending to human understanding and to AI systems, which are built on formal mathematical and computational principles.
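For readers who want the formal shape of the claim, the first theorem can be sketched as follows, in the strengthened form usually credited to Rosser. The symbols here are notation introduced for illustration, not taken from the interview: T is a formal theory, Q is Robinson arithmetic (a very weak base theory of arithmetic), and G_T is a sentence constructed from T. The `\nvdash` symbol assumes the `amssymb` package.

```latex
% First incompleteness theorem (Gödel-Rosser form), stated informally:
% if T is consistent, effectively axiomatized, and contains Robinson arithmetic Q,
% then some sentence G_T is neither provable nor refutable in T.
\[
  T \text{ consistent},\quad
  T \text{ effectively axiomatized},\quad
  T \supseteq \mathsf{Q}
  \;\Longrightarrow\;
  \exists\, G_T :\; T \nvdash G_T \ \text{ and } \ T \nvdash \neg G_T .
\]
```

Note that the theorem is relative to a particular system T: a stronger system may settle G_T, but it will have an undecidable sentence of its own.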

Although Gödel’s theorems do not prevent us from acquiring more profound insights into the universe and ourselves, they suggest that we may never fully understand or comprehensively describe everything within a single, consistent formal system. These limitations have profound implications for AI alignment, highlighting the inherent complexities and uncertainties associated with understanding and modeling human values, preferences, and social dynamics.

As a result, AI alignment may never be complete or perfect, but this doesn’t mean it’s not a worthwhile pursuit. Instead, it emphasizes the need for continuous research, development, and adaptation in AI alignment.

Efforts should focus on designing AI systems that are safe, robust, and adaptable, capable of learning and updating their understanding of human values and preferences over time.

Additionally, involving diverse perspectives and fostering collaboration among AI researchers, ethicists, social scientists, and policymakers can help ensure that AI systems are designed to better align with human values and society’s complex and evolving nature.

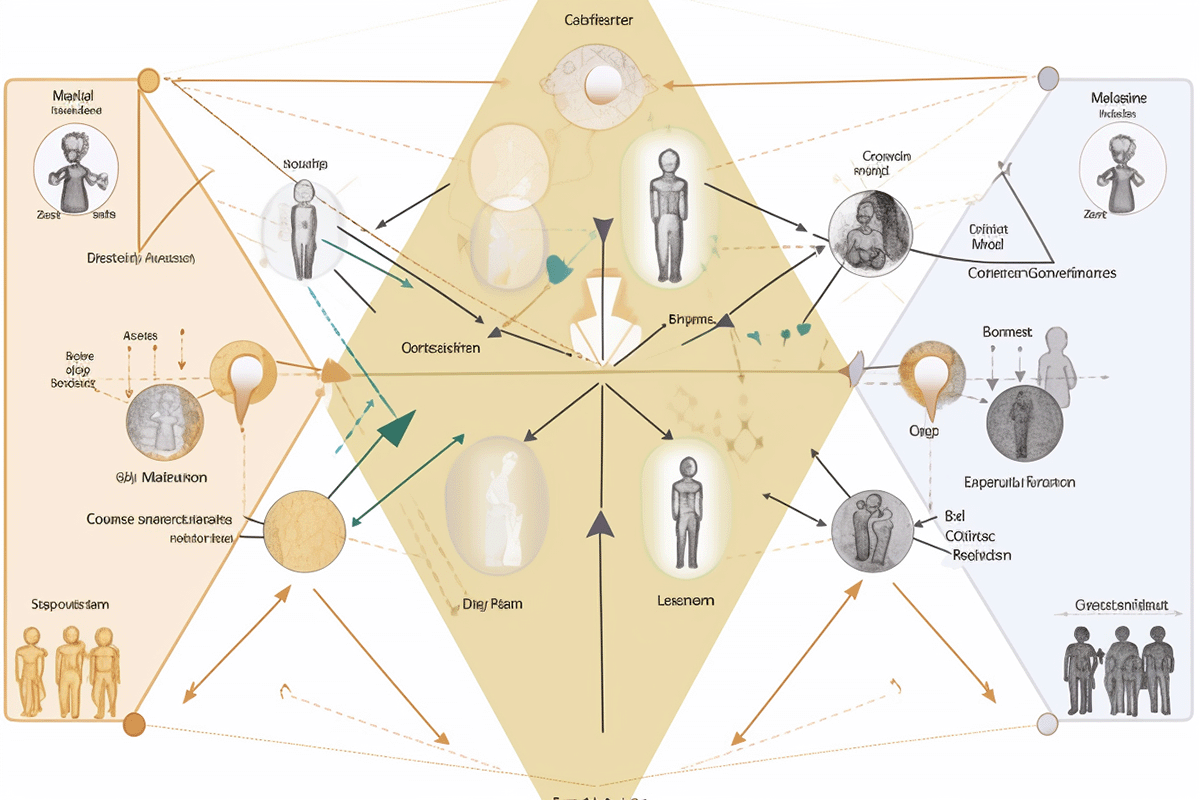

AI Alignment: A Multifaceted Problem Spanning Philosophy and Technology

AI alignment is a multifaceted problem, encompassing philosophical and technical dimensions. Philosophically, AI alignment raises questions about ethics, morality, and human values.

It seeks to determine how AI systems can be designed to respect and promote human well-being, considering the diverse values and preferences held by people from different cultures and backgrounds.

This aspect of AI alignment involves grappling with complex and often contested philosophical concepts, such as the nature of good and evil, rights, and justice.

Technically, AI alignment requires developing AI systems that can comprehend, interpret, and respond to human values and preferences safely and reliably.

This entails creating algorithms and mechanisms that enable AI systems to learn from human feedback, generalize from limited data, and adapt to novel situations and contexts. It also means addressing robustness, interpretability, and fairness issues in AI systems.
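To make "learning from human feedback" more concrete, here is a minimal sketch of one common idea: fitting a reward function to pairwise human preferences using a Bradley-Terry style model. Everything in it is an assumption made for illustration, including the function names `fit_reward` and `reward`, the two hand-picked features (helpfulness, harmfulness), and the toy comparison data. It is not a description of how any particular system is trained.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def reward(w, features):
    """Linear reward: weighted sum of a response's features."""
    return sum(wi * xi for wi, xi in zip(w, features))

def fit_reward(comparisons, n_features, lr=0.5, epochs=200):
    """Fit reward weights from (preferred, rejected) feature pairs.

    Uses stochastic gradient ascent on the Bradley-Terry log-likelihood,
    where P(preferred beats rejected) = sigmoid(reward difference).
    """
    w = [0.0] * n_features
    for _ in range(epochs):
        for preferred, rejected in comparisons:
            diff = [p - r for p, r in zip(preferred, rejected)]
            # Probability the current model assigns to the human's choice.
            p_correct = sigmoid(reward(w, diff))
            # Move the weights toward explaining the observed preference.
            for i in range(n_features):
                w[i] += lr * (1.0 - p_correct) * diff[i]
    return w

# Hypothetical toy data: each response is (helpfulness, harmfulness),
# and the first element of each pair is the one a human preferred.
comparisons = [
    ((0.9, 0.1), (0.8, 0.9)),
    ((0.6, 0.0), (0.7, 0.8)),
    ((0.8, 0.2), (0.2, 0.2)),
]
w = fit_reward(comparisons, n_features=2)
print("learned weights:", w)
```

With this toy data the learned weight on harmfulness comes out negative and the weight on helpfulness positive, so the fitted reward ranks the preferred responses above the rejected ones. The sketch also hints at the generalization problem mentioned above: three comparisons say very little about situations the features do not capture.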

The Intersection of AI Alignment and Popper’s Objective Knowledge

The connections between AI alignment and Karl Popper’s “Objective Knowledge: An Evolutionary Approach” are noteworthy. Both emphasize the iterative and evolutionary nature of knowledge and understanding.

In Popper’s perspective, learning is never static or final; instead, it is an ongoing, iterative process of conjecture and refutation, with our understanding evolving and improving over time. Similarly, AI alignment is a continuous process, as AI systems must constantly adapt and learn from human feedback to better align with human values and preferences.

Popper’s falsificationist approach to the philosophy of science acknowledges the inherent fallibility of knowledge and the need to remain open to change based on new evidence or insights.

In AI alignment, recognizing that AI systems can make mistakes and that our understanding of human values may be imperfect requires AI developers to design systems capable of learning from mistakes, incorporating new information, and adjusting their behavior accordingly.

Both Popper’s approach to objective knowledge and the pursuit of AI alignment call for collaboration across various fields of study. Popper’s work spanned philosophy, science, and the social sciences, as he sought to understand the nature of knowledge and its evolution.

Similarly, AI alignment requires input from experts in philosophy, ethics, computer science, social sciences, and other fields to ensure that AI systems are developed in ways that align with human values.

Conclusions

Gödel’s incompleteness theorems highlight the inherent complexities and uncertainties in understanding and modeling human values, preferences, and social dynamics for AI alignment.

These theorems suggest that perfect AI alignment may be unattainable within a single, consistent formal system. However, this should not deter efforts to pursue AI alignment. Instead, it underscores the need for continuous research, development, and adaptation in the field.

By designing AI systems that are safe, robust, and adaptable, capable of learning and updating their understanding of human values and preferences over time, we can work towards more aligned AI.

Involving diverse perspectives and fostering collaboration among AI researchers, ethicists, social scientists, and policymakers can help ensure that AI systems are designed to better align with the complex and evolving nature of human values and society.

Embracing the challenge of AI alignment in a Gödel-influenced world is vital for shaping a future where AI technologies benefit humanity in a safe and ethically responsible manner.